This article describes the basic idea of developing an ETL system for loading data into the Data Warehouse. The main development tasks are presented, and the process of implementing the ETL system is described.

Keywords: ETL system development, Data Warehouse, BI, DBMS, API, ODI, HTTP Basic Authentication, Target, SAP BO, DMZ.

Data Warehouse is a specialized information database designed and intended for reporting and business analysis to support decision making in any company. It is built on the basis of database management systems and decision support systems.

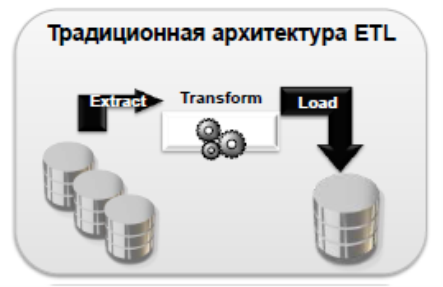

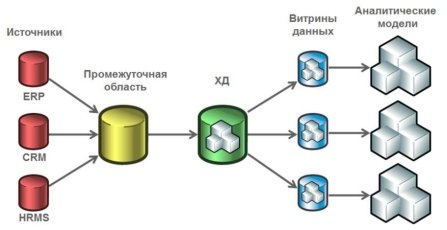

Fig. 1. ETL (Extract, Transform, Load)

ETL is one of the main processes in data warehouse management, which includes the following tasks (Fig. 1):

– extracting data from external sources (database tables, files);

– transformation and cleaning of data according to needs;

– loading the processed information into the data warehouse.

The following characteristics of an ETL system can be distinguished:

– Better access to company data;

– Ability to create reports and indicators that can drive the strategy;

– In his article «An Overview of Data Warehouse and OLAP Technologies», Chaudurian Dayal explained that a data warehouse is a separate database that analysts can query at their discretion without affecting the operation of online transaction processing (OLTP). [3]

Next, the main idea of the ETL system will be presented, as well as the development process.

The main idea of the ETL system

ETL allows businesses to combine data from multiple databases and other sources into a single repository with data that has been properly formatted and qualified to prepare for analysis. This single data repository provides simplified access for analysis and further processing.

Development process

When developing an ETL system, you need to take into account the functional requirements that implement the logic of the system. They reveal the tasks that need to be implemented in the development of an ETL system.

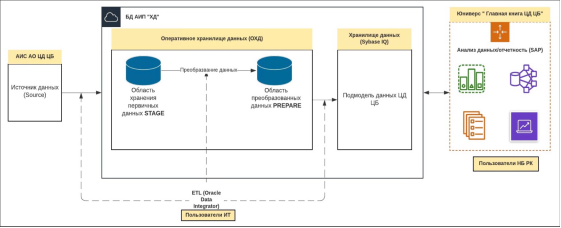

1) Extract. At the first stage, data is retrieved from the source system to the area of temporary data storage — STAGING AREA (Fig. 2), which is designed for temporary storage of data retrieved from source systems. This area is an intermediate layer between the source and the data warehouse.

Fig. 2. Extracting data to the STAGE area of temporary data storage

2) Transformation. The transform function converts the extracted data into a suitable format for analysis and storage. This process involves converting the extracted data from its old structure to a more denormalized format. This step depends on the target database.

Fig. 3. Data transformation, creation of analytical data marts

3) Loading. The load function performs the process of writing the converted data to the database. This may take several steps, as each step may complete the data in a different way. The default setup is to have raw, staging, and production databases. As a rule, the initial loading of all information is configured, followed by periodic loading of incremental changed data. [2]

ETL-tools

Different products can be used as an ETL tool. One of them is Oracle Data Integrator. Oracle Data Integrator (ODI) is an enterprise-grade integration platform that extracts, transforms, and loads data from a variety of sources: databases, files, and more.

– AIS «DW» (Target). Data Warehouse (DWH).

– Data analysis (SAP BO). Using the data from AIP «KhD», the areas of analysis, reporting and data marts are configured. Subsequently, users can independently build the necessary reporting and conduct multivariate analysis. SAP Business Objects (SAP BO) is used as analysis tools.

– DMZ (Demilitarized Zone — demilitarized zone) is a technology for ensuring the security of the internal network when providing access to external users to certain resources of the internal network (such as mail, WWW, FTP servers, etc.).

– API (Application Programming Interface) — it is a software intermediary that allows two applications to communicate with each other.

– HTTP Basic Authentication provides an authentication mechanism. This is a simple authentication scheme built into the HTTP protocol. The client sends HTTP requests that contain the word Basic and the string username:password.

Conclusion

Modern corporations require easy and fast access to data. This has led to a growing demand for transforming data into self-service systems. ETLs play a vital role in this system. They provide data analysts and data scientists with access to data from multiple application systems. This is of great importance and allows companies to get new ideas. The article describes the reason for implementing an ETL system and presents the key development points. The result of the development of this system is the preparation of reports and business analysis from the transformed and aggregated data of the data warehouse in order to support decision-making in the company.

References:

1. David Taylor, [Electronic resource] URL: https://www.guru99.com/etl-extract-load-process.html (retrieved: 12.02.2022)

2. IBM Cloud Education, [Electronic resource] URL: https://www.ibm.com/cloud/learn/etl (retrieved: 28.04.2020)

3. Zhao, Shirley (2017–10–20). «What is ETL? (Extract, Transform, Load) | Experian». Experian Data Quality. (retrieved: 12.12.2018)

- Trevor Pott (4 Jun 2018). «Extract, transform, load? More like extremely tough to load, amirite?". www.theregister.co.uk. (retrieved: 12.12.2018)

- «ETL is Not Dead. It is Still Crucial for Business Success». Data Integration Info. 8 June 2020. (retrieved: 14.07.2020)