This article examines how derivation (in particular, word formation) influences the entropy and information content of a discourse in which derivative words are used. It establishes the following dependence: when the entropy coefficient is reduced by 0.01 (1 %), the text content coefficient decreases by 0.025 (2.5 %), provided that the length of the text fragments is not taken into account. Prospects for studying the derivative potential of lexical systems lie in examining how conceptual areas develop in different linguocultures, both in isolation and in comparison. The methodology developed for determining derivative potential can give an impetus to research on derivational processes at the conceptual and linguistic levels.

Keywords: derivation, information, inclusiveness, entropy.

Introduction

Derivation of the grammatical-morphological type plays an important role in maintaining the “survivability” of a language because, unlike the emergence of entirely new words, morphological derivation preserves the principle of economy in the language system. The resulting secondary unit can be viewed in two ways. On the one hand, it becomes a full-fledged element of the structure, in which its entire interpretive and nominative potential is revealed at the syntactic and discursive levels. On the other hand, despite its usefulness, the speaker (and especially the specialist) is able to isolate the elements from which it was created by performing a certain algorithm of linguistic operations. The second fact highlights the capacity of language to single out the elements of the world that are reflected in derivational elements.

Problem Statement

Through the derivational element (affix), the secondary unit acquires an information component that was absent in the base form of the word. This is clearly confirmed at the syntagmatic level. For example, prefixal derivation can be regarded as a process of adding the extra information contained in the prefix to the base form of the word. Because it has its own semantics, the prefix reflects information about the surrounding world and presents it in a more concise form [1], [4], [7, p. 62]. For instance, the primary Portuguese verb dizer (“to say”) carries less information than its derivatives (predizer, etc.). The information in the derivative “predizer” is more compressed than in the periphrasis “to say something about the future”, which reflects an important property of the language system as a whole: the tendency to economize [10, p. 58].

Thus, French and Portuguese prefixes carry information that reflects situations of deprivation or, on the contrary, of acquisition.

The text is therefore an inclusive system whose elements contain more detailed or more concise information. Let us consider the inclusiveness of text elements and how it functions at the discourse level, using some French and Portuguese examples.

Alors je me lance, dans le dés ordre, et tant pis si j’ai l’impression d’être tout nue, tant pis si c’est idiot, quand j’étais petite je cachais sous mon lit une boîte à trésors, avec dedans toute sorte de souvenirs, une plume de paon du Parc Floral, des pommes de pain , des boules en coton pour se dé maquiller, multi colores, un porte-clés clignotant et tout, un jour j’ y ai dé posé un dernier souvenir, je ne peux pas te dire lequel, un souvenir très triste qui marquait la fin de l’enfance, j’ai re fermé la boîte [13, p. 19].

Moreover, in a properly organized text inclusiveness is not immediately perceived by the speaker or listener; it becomes noticeable only when some part of the text malfunctions (as a result of interference or the incorrect use of a word or prefix). In the following language units, information is reduced to a prefix: «me lance», «le dés ordre», «se dé maquiller», «multi colores», «dé posé», «re fermé». In the example above, inclusiveness shows itself in the reduction of the meaning of “place”, which is actualized in another part of the fragment: je cachais sous mon lit une boîte à trésors, avec dedans toute sorte de souvenirs; un jour j’y ai déposé.

A longer illustration from French: J’ai fini par re venir pour de bon, j’ai re trouvé Paris, une chambre d’enfant qui ne me re ssemble plus, j’ai demandé à mes parents de m’in scrire dans un lycée normal pour élèves normaux. Je voulais que la vie re prenne comme avant, quand tout semblait simple et s’en chaînait sans qu’on y pense, je voulais que plus rien ne nous distingue des autres familles où les parents prononcent plus de quatre mots par jour et où les enfants ne passent pas leur temps à se poser toutes les mauvaises questions [13, p. 36].

From the above, we can assume that at the discursive level entropy is directly related to the informativeness (the meaning) of the text in which a given derivative is used: if entropy decreases, the informativeness of the text decreases as well. In other words, the meanings of derivatives and their influence on sense are fully realized in the text. It is the text, as a totality of paradigmatic and syntagmatic links, that shows the effect of derivatives on informativeness. A decrease in entropy (that is, an increase in the number of variations or the misuse of word-forming elements) makes the information carried by the derivative ambiguous, which can disrupt the discrimination and understanding of meaning.

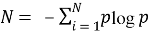

To determine how strongly the entropy of a derivative affects the semantic and informative side of the text, we turn to the research of C. Shannon, according to whom «entropy is a statistical parameter measured in a certain sense by the average amount of information per letter of a language text» [11, p. 669]. Shannon used entropy in an experiment on the predictability of English text: how accurately the text can be predicted when the preceding N letters are known [11, p. 669]. The mathematical calculation of entropy is as follows:

Here N is the coefficient of possible variations. In the present article, N denotes the number of variations of the primary and secondary bases, and the difference between the two values of N shows by how many points the entropy has changed as a result of derivational processes.
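A minimal sketch of how a number of variations N can be turned into an entropy value. It assumes, which the text does not state, that all N variations are equally likely; in that special case Shannon entropy reduces to log2 N. The function names and the sample values of N are hypothetical and are not taken from the study.

```python
import math


def uniform_entropy(n_variations: int) -> float:
    """Entropy in bits of a choice among n equally likely variations (assumption)."""
    if n_variations < 1:
        raise ValueError("n_variations must be a positive integer")
    return math.log2(n_variations)


def entropy_shift(n_primary: int, n_secondary: int) -> float:
    """Change in entropy (bits) when moving from the primary to the secondary base."""
    return uniform_entropy(n_secondary) - uniform_entropy(n_primary)


# Hypothetical counts: 4 variations for a primary base, 8 for its derivative.
print(uniform_entropy(4))
print(entropy_shift(4, 8))
```

If the variations are not equally likely, the probability-weighted form of the formula is needed instead.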

For example, the English phrase “someone will come out from the doors of the building” is uninformative and has high entropy, since we do not know who will come out (a man, a woman, a child, etc.). Informativity increases if the kind of building is specified: the phrase “someone will come out from the doors of the military barracks” most likely refers to a man.
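The effect of naming the building can be illustrated numerically. The sketch below assumes made-up probabilities for who comes out of each building (none of these figures come from the study) and shows that uncertainty about the referent drops once the building is identified as a barracks.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy in bits; zero-probability outcomes contribute nothing."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Assumed, purely illustrative distributions over (man, woman, child).
any_building = [1 / 3, 1 / 3, 1 / 3]
military_barracks = [0.90, 0.08, 0.02]

h_building = shannon_entropy(any_building)
h_barracks = shannon_entropy(military_barracks)
print(f"any building:      {h_building:.3f} bits")
print(f"military barracks: {h_barracks:.3f} bits")
```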

The semantic content of a text depends directly on its entropy, so calculating entropy is important for the practical analysis of a text for possible errors. By predicting the decrease in entropy caused by the misuse of word-forming elements, one can estimate how much the informative (semantic) side of the text (discourse) will decrease. The entropy of a derivative and its informativity are related in the same way as paradigmatics and syntagmatics. Entropy is a paradigmatic relation between linguistic units (cf. run: run up to, run away, run across, etc.), whereas informativity is a syntagmatic relation (cf. I reached the station first / I escaped from the station first / I ran across the station first, etc.).

Informativeness is calculated using the following formula of C. Shannon:

$$I = -\left(p_1 \log_2 p_1 + p_2 \log_2 p_2 + \dots + p_N \log_2 p_N\right),$$

where $p_i$ is the probability that the $i$-th message is selected from the set of $N$ possible derivatives.
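The formula can be applied directly once the probabilities are known. The sketch below estimates each probability as a relative frequency, which is an assumption (the text does not say how the probabilities were obtained), and uses a hypothetical list of French derivatives rather than data from the study.

```python
import math
from collections import Counter


def information_from_counts(counts):
    """I = -(p_1 log2 p_1 + ... + p_N log2 p_N), with p_i taken as relative frequency."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no observations")
    return -sum((c / total) * math.log2(c / total) for c in counts.values() if c > 0)


# Hypothetical sample of derivatives observed in a text (not data from the study).
observed = ["refermer", "revenir", "retrouver", "revenir",
            "déposer", "revenir", "déposer", "refermer"]
counts = Counter(observed)
info = information_from_counts(counts)
print(f"N = {len(counts)} derivatives, I = {info:.3f} bits")
```

With N equally frequent derivatives the result equals log2 N, and any skew in the frequencies lowers it.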

Proceeding from this, the following relation is formed:

This relation shows by what percentage the information in the text changes if the entropy changes by n percent. Such mathematical modeling of derivational processes proves effective for: 1. forecasting possible errors when derivatives are used in texts; 2. understanding what effect derivatives have when actually used in discourse; 3. understanding how much the information content of the derivative itself increases in comparison with the primary unit.
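For forecasting purposes, the dependence quoted in the abstract and conclusion (a 1 % change in entropy accompanied by a 2.5 % change in information) can be sketched as a simple projection. The linear form and the function name are assumptions made for illustration; the article's own relation is not reproduced here.

```python
def projected_information_change(entropy_change_pct: float,
                                 sensitivity: float = 2.5) -> float:
    """Percent change in information expected for a percent change in entropy.

    Assumes a linear relation. The default sensitivity of 2.5 is the figure
    reported in the article (1 % entropy -> 2.5 % information).
    """
    return sensitivity * entropy_change_pct


# A hypothetical misuse of a prefix that lowers entropy by 2 %.
print(projected_information_change(-2.0))
```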

In the case investigated, the information coefficients of the non-transformed and transformed text are 271.187256086867 and 260.489136780929, respectively; that is, the information loss coefficient (their ratio) is 0.96. The entropy coefficients of the text under study are 4.201437690015 and 3.892360615225, and their ratio is approximately 0.92. This change reflects the fact that information is lost through regressive convolution by one (1) element. As further evidence, we give an example with a similar prefix but a longer text segment.
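The ratios quoted above can be checked with a few lines of arithmetic. The sketch reads the first entropy coefficient as 4.201437690015 and uses a helper whose name is hypothetical. Computed this way, the entropy ratio comes out close to 0.926.

```python
# Coefficients as reported for the non-transformed and transformed text.
info_original, info_transformed = 271.187256086867, 260.489136780929
entropy_original, entropy_transformed = 4.201437690015, 3.892360615225


def ratio_and_drop(before: float, after: float):
    """Return (after / before, relative decrease in percent)."""
    ratio = after / before
    return ratio, (1.0 - ratio) * 100.0


info_ratio, info_drop_pct = ratio_and_drop(info_original, info_transformed)
entropy_ratio, entropy_drop_pct = ratio_and_drop(entropy_original, entropy_transformed)

print(f"information: ratio {info_ratio:.4f}, drop {info_drop_pct:.2f} %")
print(f"entropy:     ratio {entropy_ratio:.4f}, drop {entropy_drop_pct:.2f} %")
```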

Conclusion

Thus, the inclusiveness of the text structure correlates with the contextual functioning of linguistic units and their tendency to economize, while semantic factors act at all levels of the linguistic system, which ultimately serves the correct formation of speech and discourse. As a result, discourse is a self-contained system with the highest degree of inclusiveness of various integral and differential word-formation and syntactic structures. The study showed that a derivative has entropy and that this entropy is higher than that of the primary unit. Accordingly, the use of derivatives in written and oral speech increases its informativity. On the basis of the “entropy-informativity” correlation, it was determined that it is primarily entropy that affects informativity, since a 1 % decrease in entropy is accompanied by a 2.5 % decrease in informativity. A decrease in entropy can lead to pseudo-information and implicitness, which can be overcome by a larger context. It was also found that semi-compressed propositions have increased entropy.

References:

1. Câmara, Jr. J. M. Estrutura da Língua Portuguesa / J. M. Jr. Câmara. Petrópolis: Editora Y Vozes, 1970. 78 p.

2. Camus, A. La mort heureuse. Paris: Gallimard, 2010. 176 p.

3. Coelho, P. O demônio e a Srta. Prym. Rio de Janeiro: Sextante, 2013. 176 p.

4. Cunha, A. S. C. da. Contribuição da gramática gerativa no ensino de morfologia derivacional / A. S. C. da Cunha. São Gonçalo: UERJ, 2009. 104 p.

5. Eco, U. Open work: form and uncertainty in modern poetics. St. Petersburg: Academic Project, 2004. 384 p.

6. Favan, C. Apnée noire. Paris: Toucan noir, 2014. 384 p.

7. Gréa, Ph. Les limites de l’intégration conceptuelle / Ph. Gréa // Langage. La constitution extrinsèque du référent. 2003. № 150. P. 61–74.

8. Le Figaro. URL: http://www.lefigaro.fr/politique/le-scan/citations/

9. Peytard, J. De l’ambiguïté sémantique dans les léxies préfixées par auto- / J. Peytard // Langue française. La sémantique. 1969. № 4. P. 88–107.

10. Schmidt, L. C. A forma e o uso dos prefixos PRÉ- e PÓS- no português falado no sul do Brasil / L. C. Schmidt // Letras de Hoje. 2005. № 141. P. 57–72.

11. Shannon, C. Works on the theory of information and cybernetics. Moscow: Foreign Literature Publishing House, 1963. 832 p.

12. Victorri, B. Les grammaires cognitives. La linguistique cognitive / B. Victorri. Paris: Editions Ophrys, 2004. P. 73–98.

13. Vigan, D. de. No et moi. Paris: JC Lattès, 2007. 384 p.