In today's rapidly changing technological landscape, companies seek ways to boost performance, streamline processes, and improve employee productivity to achieve greater efficiency and sustain business growth. The use of advanced analytics has become increasingly popular, helping organizations unlock these benefits. However, the successful adoption of data science capabilities requires companies to have a solid understanding of analytics goals and change management requirements.

This research analyzes four areas of advanced analytics with practical implications in the CPG industry: market basket analysis, predictive product recommendations, advanced suggested order quantity for e-commerce, and price elasticity modeling. It examines their potential to create value for businesses and improve productivity. The methodology used in this research includes empirical analysis, historical data analysis, regression, and statistical analysis. The purpose of this research is not to delve into the intricacies of data science and statistics but rather to understand the applicability of use cases in real business and translate potential data science benefits into business language.

Initial findings show that these data science tools can reveal new insights and highlight growth opportunities. Predictive product recommendations, if followed, could increase sales by up to 300 %. For e-commerce, suggested order quantity (SOQ) could reduce out-of-stock occurrences from 15 % to 2 %. However, thorough planning and change management are essential to benefit from these tools. This article provides business leaders and analysts with valuable insights into how data science can drive growth and productivity and underscores the importance of data-driven decision-making and the challenge of changing the status quo.

Keywords: artificial intelligence, insights, foresights, business growth, value-driven operations.

Introduction

This paper reviews and explores real use cases of predictive analytics and statistical analysis in trade marketing, used to make forecasts in a company with a global presence. A systematic identification of data science methods and use cases is presented, with data drawn from marketing B2B systems. This paper does not explain the data science methods and processes in detail, as these are adequately covered in many books, such as those by Avrim Blum and John Hopcroft [1]. Instead, it focuses on real business use cases, their applicability in real business scenarios, and other scholars' experiences in the data science field.

Trade marketing is a broad term associated with activities aimed at increasing product sell-through rates at the retailer, wholesaler, or distributor level. It encompasses various strategies and practices designed to ensure that products are available, visible, and appealing in the marketplace. This includes activities such as in-store execution, trade promotions, merchandising, channel management, and supply logistics.

The rationale for applying predictive analytics in trade marketing lies in the complexity of calculating the most effective marketing strategies manually. This complexity arises from the large variety of products on shelves and the diverse range of customers of different formats and sizes.

For example, in the CPG industry, relationships with customers are often based on pay-for-performance (P4P) or pay-for-compliance (P4C) models. Determining performance and compliance criteria is a labor-intensive process due to the large volume of input data. Predictive analytics significantly optimizes these processes by offering statistically accurate solutions based on various internal, external, and historical data.

The term predictive analytics is more commonly applied when moving beyond descriptive models to computational predictions based on data. Within trade marketing, predictive analytics can be built using data science methods such as regression analysis applied to historical data sets. The term encompasses both explanatory data analysis and predictive computational methods: explanatory data analysis is descriptive and backward-looking, while predictive computational methods are forward-looking and make forecasts. Unlike much of applied forecasting, conclusions in predictive analytics are drawn from data rather than human interpretation. Researchers must therefore be comfortable with data-driven approaches and aware of overfitting, where a model fits noise in the historical data and then generalizes poorly to new data. They should also resist the related temptation to build a theory around the data and then adjust the data to support the theory.

For predictive analytics to be effective, it is essential to have clean and reliable descriptive data from corporate information systems or data warehouses. Handling large, varied, and complex internal and external data is challenging in the diverse and evolving field of trade marketing. Normally, it is not just one source of data but diverse data sources, such as trade execution data, pre-computational analysis data stored in separate systems, data provided by customers, external data like Nielsen, ERP systems data, etc. For this analysis, golden data sets containing diverse examples of data were created and stored in information systems, along with additional calculated data from these systems.

The practical application of predictive analytics in trade marketing can be quite broad. It can be used to classify event outcomes, such as sales trends, recommended products, stock levels, and customer behavior. It can forecast numeric values, such as demand forecasts or customer lifetime value. Additionally, it can identify anomalies, such as sudden drops in sales or unexpected inventory shortages, and group data clusters, such as customer segmentation for targeted marketing campaigns. It can also be used for forecasting time series, helping businesses anticipate future trends.

All predictive analytics models begin with a construction process. The Cross-Industry Standard Process for Data Mining (CRISP-DM) [2] outlines the steps: understanding the business context and the data, preparing the data, modeling, evaluation, and deployment. Domain experts are required to categorize and tabulate variables into attributes, descriptors, and features. Outliers should be identified, as they can significantly impact predictive accuracy. Once the data is prepared, predictive models can be built.

In reviewing advanced analytics methods, this paper used existing literature by including studies from scholars who have used data-based predictive analytics to investigate similar questions. By collecting and examining other scholars' techniques, it aims to facilitate their adoption and application in research and practical settings.

Methodology

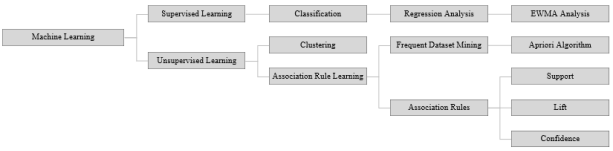

To identify the methods, a broad literature review was conducted using scholarly articles [3], [4] to identify the most important methodologies of machine learning analytics in trade marketing. These methodologies are detailed in later sections of the paper. This review followed the data science methods and rationales proposed by Kelleher, John D., et al. [5].

Association Rules and Apriori Algorithm

Market Basket Analysis (MBA) is a data mining technique used to identify associations between products frequently purchased together. The primary algorithm employed in MBA is Apriori, which operates in two main phases. First, it identifies sets of items that co-occur in transactions at a frequency exceeding a predefined minimum support threshold. Then, using these frequent itemsets, the algorithm generates association rules that quantify the likelihood of products being purchased together.

The resulting association rules are typically expressed in the form «If item A is purchased, then item B is likely to be purchased with X % confidence». These rules provide quantitative insights into customer and consumer purchasing behavior, enabling companies to optimize product placement, promotions, and inventory management strategies.
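To make the two phases concrete, here is a minimal pure-Python sketch of Apriori, assuming each transaction is given as a set of product identifiers. The function names `apriori` and `association_rules` and the toy baskets are hypothetical illustrations, not the implementation used in this research. Production work would normally rely on an established library.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every itemset whose support
    (share of transactions containing it) is at least min_support."""
    baskets = [frozenset(t) for t in transactions]
    n = len(baskets)

    def support(itemset):
        return sum(1 for basket in baskets if itemset <= basket) / n

    # Phase 1, level 1: frequent single products.
    items = {item for basket in baskets for item in basket}
    current = {frozenset([item]) for item in items}
    current = {s for s in current if support(s) >= min_support}
    frequent = {s: support(s) for s in current}

    k = 2
    while current:
        # Join: merge frequent (k-1)-itemsets into k-item candidates.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune: every (k-1)-subset of a frequent itemset must itself be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(sub) in current for sub in combinations(c, k - 1))
        }
        current = {c for c in candidates if support(c) >= min_support}
        frequent.update((c, support(c)) for c in current)
        k += 1
    return frequent


def association_rules(frequent, min_confidence):
    """Phase 2: turn frequent itemsets into (antecedent, consequent, confidence)."""
    rules = []
    for itemset, itemset_support in frequent.items():
        if len(itemset) < 2:
            continue
        for size in range(1, len(itemset)):
            for antecedent in map(frozenset, combinations(itemset, size)):
                consequent = itemset - antecedent
                # Any subset of a frequent itemset is frequent, so it is in the dict.
                confidence = itemset_support / frequent[antecedent]
                if confidence >= min_confidence:
                    rules.append((antecedent, consequent, confidence))
    return rules
```

Consider the baskets {cola, chips}, {cola, chips, salsa}, {cola, water} and {chips, salsa} with a minimum support of 0.5. The frequent pairs are {cola, chips} and {chips, salsa}. The candidate triple is pruned because {cola, salsa} is not frequent. The rule salsa → chips reaches a confidence of 1.0.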

For instance, in MBA, product mix likelihood helps adjust predictive models to incorporate the additional factor of customer or consumer preferences, thus making the prediction models based on regression analysis more accurate.

Regression Analysis

Product recommendations based on historical sales data are a classical regression problem. This technique constructs predictive models that forecast suggested quantities for each product while accounting for various influencing factors. The effectiveness of regression analysis in this context stems from its ability to establish clear relationships between variables and provide accurate estimates of future product demand.

By analyzing past sales patterns and relevant variables, regression models can identify trends and correlations that inform product recommendations. These models consider multiple factors simultaneously, such as seasonality, pricing, product mix, and marketing promotions, to generate more comprehensive and accurate forecasts.

EWMA

The Exponentially Weighted Moving Average (EWMA) is a useful technique for calculating suggested order quantities (SOQ) in inventory management or B2B/B2C e-commerce sales, where the opportunity to run computations over broad data sets may be limited. For example, SOQ on e-commerce websites should be calculated almost in real time. EWMA stands out because it assigns more weight to recent sales data, allowing it to adapt quickly to changing demand patterns. This responsiveness matters for marketplaces, where users see a vast number of suggestions while browsing and a consistent UX is important.

By incorporating newer information and giving it higher weight, the EWMA technique helps businesses stay agile in areas where it is used. EWMA can spot emerging trends or sudden shifts in customer behavior faster than methods that treat all historical data equally. This means companies can adjust their inventory levels or suggest order quantities more accurately and promptly.
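The recursive form of EWMA shows why it suits near-real-time SOQ. Each update needs only the previous smoothed value and the newest observation. The sketch below makes two assumptions: the analyst chooses the smoothing factor `alpha`, and the series is seeded with its first observation, which is one common convention among several. The function name is hypothetical.

```python
def ewma(values, alpha):
    """Exponentially weighted moving average in recursive form:
    s_t = alpha * x_t + (1 - alpha) * s_{t-1}, seeded with the first value.

    alpha in (0, 1]: a larger alpha gives recent observations more weight.
    Returns the smoothed series, same length as values.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    if not values:
        return []
    smoothed = [float(values[0])]
    for x in values[1:]:
        # O(1) work per new observation: only the last smoothed value is needed.
        smoothed.append(alpha * x + (1 - alpha) * smoothed[-1])
    return smoothed
```

Take weekly orders of 10, 10 and 10 units, then 20, with alpha = 0.5. The smoothed value moves to 15 units. The simple average of all four weeks is 12.5, so the EWMA reacts faster to the jump.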

Logarithmic Regression

Logarithmic regression analysis was selected for modeling price elasticity. In price elasticity analysis, non-linear dependencies between price, profit, and demand are evaluated, capturing the relationship between variables as a classical regression problem. The model's ability to handle non-linear relationships makes it especially useful in real-world scenarios, where the impact of price changes on volume isn't always straightforward or proportional.

All the mentioned methods are subsets of AI and machine learning. I have visualized their positioning in Figure 1 below for your convenience.

Fig. 1. Methods and their relations within ML hierarchy

Market basket analysis

Market Basket Analysis (MBA) is a powerful data mining method that is pivotal in understanding customer or consumer purchasing behavior. According to another scholarly article [6], this method is innovative and can enhance customer loyalty among both online and offline customers. It helps businesses identify relationships between products purchased together, which can unlock strategic decisions in inventory management, product placement, customer relations, and demand forecasting. MBA helps resolve traditional business questions such as understanding the buying behavior of customers.

The primary objective of MBA is to identify product associations, thereby enabling businesses to:

– Increase revenue through effective cross-selling and up-selling strategies.

– Optimize product placement to enhance the customer shopping experience.

– Improve inventory management by predicting product demand.

– Personalize marketing campaigns based on customer purchasing patterns.

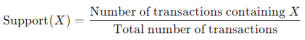

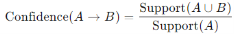

MBA relies heavily on transactional data, which includes detailed records of customer purchases. The core metrics used in MBA are Support, Confidence, and Lift, which quantify the relationships between products. Let's explore these concepts in more detail.

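Support: The support of a product (or set of products) is the share of all transactions that contain it. In simple terms, it shows how often the product appears in customers' orders:

$$\text{Support}(A) = \frac{\text{number of transactions containing } A}{\text{total number of transactions}}$$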

Confidence, in turn, is not about a single product but about a combination of products. It measures the likelihood that a product is purchased along with another product. For two products, confidence is the share of transactions containing Product A that also contain Product B:

$$\text{Confidence}(A \rightarrow B) = \frac{\text{Support}(\{A, B\})}{\text{Support}(A)}$$

For example, a confidence of 0.75 means that 75 % of the time when customers buy Product A, they also buy Product B.

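Lift shows how much the presence of one product increases the chances of buying the other. It compares the confidence of the rule with the baseline frequency of Product B:

$$\text{Lift}(A \rightarrow B) = \frac{\text{Confidence}(A \rightarrow B)}{\text{Support}(B)}$$

Suppose the lift for the “A+B” combination equals 1.2. Then customers who buy Product A are 20 % more likely to buy Product B than customers in general. A lift of 1 means the two purchases are independent.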
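The three metrics can be computed directly from transaction data. The sketch below uses a hypothetical helper, `pair_metrics`, and eight toy baskets. The baskets are constructed so that the rule A → B reproduces the confidence of 0.75 and lift of 1.2 used in the examples of this section.

```python
def pair_metrics(transactions, a, b):
    """Support, confidence and lift for the rule a -> b.

    transactions: list of sets of product identifiers.
    """
    n = len(transactions)
    n_a = sum(1 for t in transactions if a in t)
    n_b = sum(1 for t in transactions if b in t)
    n_ab = sum(1 for t in transactions if a in t and b in t)
    if n_a == 0 or n_b == 0:
        raise ValueError("both products must appear in at least one transaction")
    support_ab = n_ab / n           # share of baskets containing both A and B
    confidence = n_ab / n_a         # P(B | A)
    lift = confidence / (n_b / n)   # P(B | A) / P(B)
    return support_ab, confidence, lift


baskets = [{"A", "B"}, {"A", "B"}, {"A", "B"}, {"A"}, {"B"}, {"B"}, {"C"}, {"C"}]
support_ab, confidence, lift = pair_metrics(baskets, "A", "B")
print(f"support={support_ab:.3f} confidence={confidence:.2f} lift={lift:.2f}")
```

In these baskets, A appears in 4, B in 5, and both together in 3. This gives a support of 3/8 = 0.375, a confidence of 3/4 = 0.75, and a lift of 0.75 / (5/8) = 1.2.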

While researching Market Basket Analysis (MBA) and overall sales predictions, I found that in the context of trade marketing, the lift metric serves a dual purpose. Lift not only measures the likelihood of products being purchased together but also reflects potential customer or consumer preferences for specific product combinations, assuming orders are not placed randomly. This preference pattern may be driven by customer or consumer behaviors and outlet factors.

In essence, a higher lift indicates a stronger recommendation for supplying the bundle, as these combinations demonstrate strong preference from the customer or consumer and tend to have higher turnover.

In the analysis I conducted in real business environments, the lift for product combinations reached up to 300 % for combinations of two products and up to 70 % for combinations of three products. Exploring larger combinations, such as four or five products, doesn't reveal many additional insights or patterns in purchasing behavior, so I would not recommend going beyond combinations of three products in MBA.

While doing the research, I explored how the likelihood from MBA can be transformed into quantity recommendations. To bridge MBA with predictive recommendations, we essentially need to address a regression problem. The goal is to integrate MBA insights and likelihoods into a regression model, capturing the relationship between frequently bought-together products and their quantities based on historical data.

Bridging market basket analysis and predictive product recommendations

Demand prediction in retail has become a popular area with an extensive body of literature and scholarly articles. When researching the topic, I followed the best practices described in the book «Demand Prediction in Retail: A Practical Guide to Leverage Data and Predictive Analytics» by M. C. Cohen et al. [7]. Market Basket Analysis (MBA) identifies likely product combinations, providing insights into customer and consumer purchasing patterns. Predictive product recommendation, on the other hand, focuses on forecasting specific quantities of products to be recommended. By integrating MBA insights into regression analysis, we can create a robust model that not only identifies frequent (preferred) product combinations but also predicts the quantities to be stocked or recommended.

Identify Product Recommendations Using MBA

For example, if MBA indicates a strong association between Product A and Product B with a confidence of 0.75 and a lift of 1.2, this means Product B is likely to be bought 75 % of the time when Product A is purchased, and the likelihood of buying Product B increases by 20 % when Product A is bought.

Build a Predictive Model Using Regression Analysis

I used linear regression for this analysis due to its simplicity, interpretability, and effectiveness in modeling relationships between quantities. Linear regression quantifies the relationship between two variables, with easily interpretable coefficients that show how changes in one product's quantity affect another's. It is computationally efficient, making it suitable for large datasets in trade marketing and distribution.

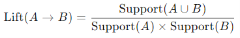

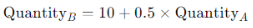

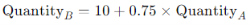

The regression model can be formulated as follows:

$$Q_B = \beta_0 + \beta_1 Q_A + \epsilon$$

Where:

$Q_A$, $Q_B$: Quantities of Product A and Product B sold.

$\beta_0$: Intercept, the expected value of the dependent variable (output) when all independent variables (inputs) are zero. In our context, it represents the baseline quantity of Product B sold when no units of Product A are sold.

$\beta_1$: Coefficient, indicating the expected change in the quantity of Product B for each unit increase in the quantity of Product A.

$\epsilon$: Error term, accounting for the variability in the quantity of Product B not explained by Product A.

The error term represents all other factors affecting the sales of Product B that are not directly linked to the sales of Product A. These factors could include promotional activities, seasonal effects, economic conditions, or customer preferences. In the regression model, values influenced by the error term may appear as outliers. This means they deviate significantly from the predicted values, indicating the impact of those external factors.
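The intercept and slope of this single-predictor model can be estimated with ordinary least squares. The following is a minimal sketch; the function names and sample quantities are hypothetical. The residuals it returns are the sample counterpart of the error term. Large residuals are where outliers driven by promotions, seasonality or other external factors would show up.

```python
def fit_simple_ols(x, y):
    """Least-squares estimates (beta0, beta1) for y = beta0 + beta1 * x + error."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length series with at least two points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    s_xx = sum((xi - mean_x) ** 2 for xi in x)
    s_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    if s_xx == 0:
        raise ValueError("x must vary to estimate a slope")
    beta1 = s_xy / s_xx              # change in B per extra unit of A
    beta0 = mean_y - beta1 * mean_x  # baseline quantity of B when A is zero
    return beta0, beta1


def residuals(x, y, beta0, beta1):
    """Observed minus fitted values: the sample counterpart of the error term."""
    return [yi - (beta0 + beta1 * xi) for xi, yi in zip(x, y)]


qty_a = [0, 2, 4, 6]  # hypothetical weekly units of Product A per outlet
qty_b = [5, 6, 7, 8]  # hypothetical weekly units of Product B per outlet
beta0, beta1 = fit_simple_ols(qty_a, qty_b)
```

Here the fit gives an intercept of 5 and a slope of 0.5. In other words, the model predicts a baseline of five units of Product B, plus half a unit of B for every unit of A sold.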

Suppose the regression model based on historical data is:

If MBA indicates a confidence of 0.75 for Product A and Product B, we adjust our predictive model to better reflect this relationship. For instance, as a heuristic adjustment, we might increase $\beta_1$ to 0.75.

By integrating MBA insights into regression analysis, we create a predictive model that leverages both the likelihood of product associations and the specific quantities to be recommended. This bridge between MBA and regression analysis improves the accuracy of inventory management and personalized recommendations. In turn, this can unlock better business decisions and increased customer satisfaction.

Advanced SOQ

The calculation of suggested order quantities (SOQ) in modern e-commerce platforms presents a challenge in balancing user experience with computational efficiency. Traditional methods often rely on simple averages of historical buying behavior, potentially introducing bias and overlooking crucial factors such as purchase history, bulk discounts, product shelf life, seasonality, demographics, and others. However, incorporating more complex and pre-calculated factors increases computational complexity.

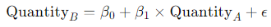

Through research and interviews with e-commerce department managers, I identified several factors that marketers consider essential for SOQ calculations: new product introductions (NPI), promotions, product shelf life, and product capping. I also found that applying exponentially decreasing weights to historical data can better align suggested quantities with marketers' expectations. Under this weighting, recent data carries more importance, and the weight of each data point decreases exponentially the further back in time it lies. The expectations concerned cover product presence, promotions, and overall customer contract management in terms of performance and compliance.

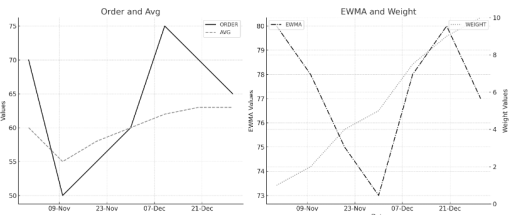

Figure 2 below compares two approaches using the same dataset: a traditional SOQ method (left chart) based on simple averages, and an adjusted SOQ method (right chart) incorporating additional factors (NPIs, promotions, end-of-life products) and exponential weighting. The adjusted model, which also considers customer store capacity for B2B e-commerce, demonstrated quantity uplifts ranging from 6 % to 56 % compared to the traditional approach.

This comparison highlights the potential benefits of a more nuanced approach to SOQ calculations in e-commerce platforms, balancing computational efficiency with improved accuracy and alignment with marketing objectives.

Fig. 2: Standard (average) and advanced (EWMA) SOQ
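Below is a minimal sketch of the two SOQ approaches compared in Figure 2, under stated assumptions. The decay factor, the promotion multiplier and the capacity cap are hypothetical parameters. The NPI and shelf-life rules mentioned above are omitted for brevity. The weighting is a finite-window variant of EWMA: the newest period has weight 1, and each earlier period's weight is multiplied by `decay`.

```python
def simple_average_soq(history):
    """Traditional SOQ: plain mean of past order quantities."""
    return sum(history) / len(history)


def weighted_soq(history, decay=0.7, promo_uplift=1.0, capacity=None):
    """Hypothetical 'advanced' SOQ with exponentially decreasing weights.

    history      -- past order quantities, oldest first
    decay        -- per-period weight multiplier (0 < decay < 1); the newest
                    period gets weight 1, the previous one decay, then decay**2, ...
    promo_uplift -- assumed multiplier while the product is on promotion
    capacity     -- optional cap, e.g. the customer's store capacity (B2B)
    """
    if not history:
        raise ValueError("history must not be empty")
    if not 0 < decay < 1:
        raise ValueError("decay must be in (0, 1)")
    n = len(history)
    weights = [decay ** (n - 1 - i) for i in range(n)]  # oldest -> smallest weight
    base = sum(w * q for w, q in zip(weights, history)) / sum(weights)
    soq = base * promo_uplift
    if capacity is not None:
        soq = min(soq, capacity)
    return round(soq)  # whole units for the order form
```

Consider a history of 10, 10, 10 and 20 units with decay = 0.5. The traditional average suggests 12.5 units, while the weighted SOQ suggests 15 (the weighted mean is about 15.3). A 1.2 promotion multiplier raises it to 18, and a capacity of 16 caps it at 16.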

Price elasticity

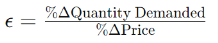

Price elasticity is a concept in economics that measures how sensitive demand for a product or service is to changes in its price. In the context of Revenue Growth Management (RGM), understanding price elasticity is crucial for optimizing pricing strategies and maximizing revenue (David R. Rink et al., 2016) [8].

Price elasticity is typically expressed as the ratio of the percentage change in quantity demanded to the percentage change in price. Demand usually falls as price rises, so this ratio is normally negative and is classified by its absolute value. If |E| is greater than 1, demand is considered elastic (sensitive to price changes). If |E| is less than 1, demand is inelastic (less sensitive to price changes):

$$E = \frac{\Delta Q / Q}{\Delta P / P}$$

Where:

– Δ Q = Change in quantity demanded

– Q = Initial quantity

– Δ P = Change in price

– P = Initial price
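The formula translates directly into code. This sketch uses the initial quantity and price as the base (point elasticity). Some practitioners prefer the midpoint (arc) formula instead, which would give slightly different values. Because elasticities are usually negative, the classification uses the absolute value. The function names are hypothetical.

```python
def price_elasticity(q0, q1, p0, p1):
    """Point elasticity (dQ/Q) / (dP/P), using the initial values as the base."""
    if q0 == 0 or p0 == 0 or p1 == p0:
        raise ValueError("initial quantity and price must be non-zero, price must change")
    return ((q1 - q0) / q0) / ((p1 - p0) / p0)


def classify(elasticity):
    """Elastic if |E| > 1, inelastic if |E| < 1, unit elastic if |E| == 1."""
    magnitude = abs(elasticity)
    if magnitude > 1:
        return "elastic"
    if magnitude < 1:
        return "inelastic"
    return "unit elastic"
```

Suppose a price increase from 100 to 110 reduces quantity from 1,000 to 950 units. The elasticity is then (−50/1,000) / (10/100) = −0.5, which is inelastic. A drop to 800 units would instead give −2.0, which is elastic.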

Leveraging price elasticity data, the Revenue Growth Management (RGM) department can develop more sophisticated, data-driven strategies that balance volume, price, and product mix to drive sustainable revenue growth. Automating price elasticity calculations with data science techniques doesn't pose significant technical challenges. Yet it allows companies to move beyond simple Excel calculations based on “cost plus pricing” toward a more nuanced, visual understanding of how pricing decisions affect overall business performance. With the help of data science analysis, price opportunity ranges can be easily visualized. For better precision, marketers or finance teams can ask data science teams to incorporate additional factors into elasticity models using a multiple regression approach. Let's go beyond the basic elasticity formula and see what the steps of a multiple regression model may look like.

Data Collection: Collect historical data on price, sales, and profit per product. Additionally, marketers can gather data they think may be important for elasticity modeling. Examples include seasonality, competitors' prices, consumer preferences (sentiment indices, survey results, etc.), and new product introductions (NPIs). Other relevant factors include economic indicators, marketing spend, and promotional activities.

Data Preparation: Ensure that the collected data is aligned, cleaned, and reliable. Data should be normalized and standardized where appropriate, and missing values should be handled through interpolation, imputation, or exclusion.

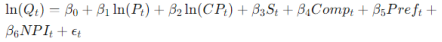

Constructing the Regression Model: When additional independent variables (additional factors) are included, the model is typically specified in log-log form using the natural logarithm (ln). Taking logs lets us interpret coefficients as percentage changes, so the coefficient on ln(price) is the price elasticity itself. This makes it easier to understand how much sales change when the price changes by a certain percentage rather than by a specific currency amount:

$$\ln Q_t = \beta_0 + \beta_1 \ln P_t + \beta_2 \ln \mathrm{CP}_t + \beta_3 S_t + \beta_4 \mathrm{Comp}_t + \beta_5 \ln \mathrm{Pref}_t + \beta_6 \mathrm{NPI}_t + \epsilon_t$$

Where:

– $Q_t$ = Quantity demanded at time $t$

– $P_t$ = Price at time $t$

– $\mathrm{CP}_t$ = Competitors' prices at time $t$

– $S_t$ = Seasonal variables (can be dummy variables, e.g., 1 for winter, 0 otherwise)

– $\mathrm{Comp}_t$ = Variable for competitors' actions (e.g., price cuts or promotions; can be a dummy variable)

– $\mathrm{Pref}_t$ = Consumer preference index at time $t$

– $\mathrm{NPI}_t$ = Variable for new product introductions (e.g., 1 if the product is new, 0 otherwise)

– $\epsilon_t$ = Error term
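Readers who want to experiment with this kind of specification can use the following minimal, dependency-free sketch. It fits a log-log regression by ordinary least squares on synthetic data. It is not the model behind Figure 3, and several parts are hypothetical: the "true" coefficients (an elasticity of −1.3, a competitor-price coefficient of 0.6 and a seasonal effect of 0.1), the noise level, and the helper names `ols` and `r_squared`. Only three of the regressors above are included. In practice, a statistics package such as statsmodels would also report the standard errors, p-values and F-statistic that are interpreted for Figure 3.

```python
import math
import random


def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    X is a list of rows, each starting with 1.0 for the intercept. The system
    is solved by Gaussian elimination with partial pivoting.
    """
    k = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(k)]
    a = [xtx[i] + [xty[i]] for i in range(k)]  # augmented matrix [X'X | X'y]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, k):
            factor = a[r][col] / a[col][col]
            for c in range(col, k + 1):
                a[r][c] -= factor * a[col][c]
    beta = [0.0] * k
    for i in reversed(range(k)):  # back substitution
        beta[i] = (a[i][k] - sum(a[i][j] * beta[j] for j in range(i + 1, k))) / a[i][i]
    return beta


def r_squared(X, y, beta):
    """Share of the variance in y explained by the fitted model."""
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


# Hypothetical "true" model: ln Q = 5.0 - 1.3 ln P + 0.6 ln CP + 0.1 S + noise
rng = random.Random(42)
X, y = [], []
for t in range(200):
    price = rng.uniform(80, 120)
    comp_price = rng.uniform(80, 120)
    season = 1.0 if t % 4 == 0 else 0.0  # dummy: 1 in one quarter, 0 otherwise
    ln_q = (5.0 - 1.3 * math.log(price) + 0.6 * math.log(comp_price)
            + 0.1 * season + rng.gauss(0, 0.02))
    X.append([1.0, math.log(price), math.log(comp_price), season])
    y.append(ln_q)

beta = ols(X, y)
print("coefficients [const, ln P, ln CP, S]:", [round(b, 3) for b in beta])
print("R-squared:", round(r_squared(X, y, beta), 3))
```

Because the model is in logs, the estimate in `beta[1]` should land close to −1.3 and reads directly as a price elasticity. A 1 % price increase is associated with roughly a 1.3 % decrease in quantity.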

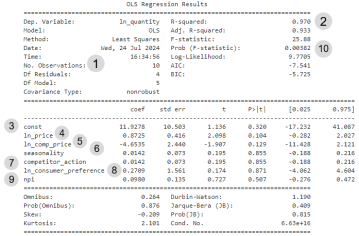

Regression models require software tools such as Python, R, or other statistical software to estimate the model coefficients. For the purpose of this article, I will not go into the details of my Python implementation. Instead, I will focus on interpreting and evaluating the model results (see Figure 3 below) so that readers can understand the application of price elasticity in real business environments.

Fig. 3: results of multiple regression model for one product

- Number of Observations: This is the number of records in the data set. I deliberately limited my data set for simplicity and ease of explanation. Marketers and finance specialists may analyze data sets with thousands of records from diverse sources, which should not pose computational challenges.

- R-squared value: The R-squared value is 0.970, indicating that approximately 97 % of the variance in the dependent variable (ln(quantity)) is explained by the independent variables in the model. Note that R-squared measures goodness of fit, not elasticity. In a log-log model, the price elasticity is the coefficient on ln(price), discussed below. You may notice that in our case, this elasticity is positive but below 1. This is because I deliberately created a borderline data set to explore this scenario. A positive price elasticity below 1 indicates inelastic demand; the implications of such situations are examined later in this paper.

- Intercept (const): The intercept is 11.9278, representing the expected value of ln(quantity) when all independent variables are zero. However, this value is not statistically significant, with a p-value of 0.320. In statistical analysis, 0.05 is a commonly used threshold, but it's not magic. It's a guideline that helps researchers decide when they have enough evidence to say something interesting is happening in their data. A p-value below 0.05 means that there is less than a 5 % chance of observing an effect at least this large if the true coefficient were zero.

- Coefficient for ln(price): The coefficient for ln(price) is 0.8725, suggesting that a 1 % increase in price is associated with a 0.87 % increase in quantity demanded, although this result is not statistically significant (p = 0.104).

- Coefficient for ln(comp_price): The coefficient for ln(comp_price) is -4.6535, indicating that a 1 % increase in competitors' prices is associated with a 4.65 % decrease in quantity demanded. This result is also not statistically significant (p = 0.129).

- Seasonality variable: The seasonality variable has a coefficient of 0.0142, indicating seasonal effects, but it is not statistically significant (p = 0.855).

- Competitor_action variable: The competitor_action variable has a coefficient of 0.0142 and is not statistically significant (p = 0.855).

- Coefficient for ln(consumer_preference): The coefficient for ln(consumer_preference) is 0.2709, suggesting that a 1 % increase in consumer preference is associated with a 0.27 % increase in quantity demanded, but this result is not statistically significant (p = 0.871).

- NPI (new product introduction) variable: The NPI variable has a coefficient of 0.0980 and is not statistically significant (p = 0.507).

- F-statistic: The F-statistic for the model is 25.88 with a p-value of 0.00382, indicating that the overall model is statistically significant, meaning that the independent variables jointly explain the variance in the dependent variable.

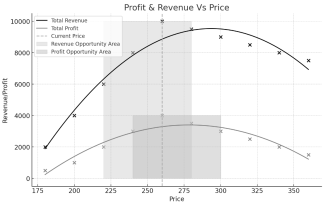

What I value most from this research is how price elasticity and related opportunities can be visualized with regression analysis alone. The visualization shows clear areas of growth, maturity, and decline for both volume and profit as prices change (see Figure 4). According to the regression analysis shown in the diagram below, a price of 275 is a clear threshold beyond which both revenue and profit start to decline.

Fig. 4: Growth, Maturity and Decline areas of Profit & Volume
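The idea behind Figure 4 is to scan candidate prices and track where revenue and profit peak, which can be sketched in a few lines. The sketch assumes a hypothetical linear demand curve Q = 1000 − 2P and a unit cost of 100 rather than the fitted model from this study. The thresholds it finds are therefore illustrative only.

```python
def linear_demand(price, intercept=1000.0, slope=2.0):
    """Hypothetical demand curve: Q = intercept - slope * P."""
    return intercept - slope * price


def scan_prices(prices, demand, unit_cost):
    """Return (price, quantity, revenue, profit) for each candidate price."""
    rows = []
    for p in prices:
        q = max(demand(p), 0.0)  # demand cannot fall below zero
        rows.append((p, q, p * q, (p - unit_cost) * q))
    return rows


rows = scan_prices(range(100, 501), linear_demand, unit_cost=100.0)
best_revenue = max(rows, key=lambda row: row[2])
best_profit = max(rows, key=lambda row: row[3])
print("revenue peaks at price", best_revenue[0])
print("profit peaks at price", best_profit[0])
```

On this toy curve, revenue peaks at a price of 250 (500 units, revenue 125,000) and profit peaks at 300 (400 units, profit 80,000). Beyond those prices both decline, which is the kind of threshold shown in Figure 4.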

Discussion

The purpose of this study was to explore how predictive analytics methods can be utilized in trade marketing to forecast various business outcomes. The main research questions centered on identifying effective data science methodologies for improving product sell-through rates, optimizing inventory management, and enhancing pricing strategies.

Market Basket Analysis (MBA): The findings suggest that MBA can be beneficial in identifying strong associations between frequently purchased products, aiding in effective cross-selling and up-selling opportunities. Additionally, MBA can serve as a precursor for predictive product recommendations by incorporating lift and confidence findings into a regression model for recommendations.

Regression Analysis: This method demonstrated its utility in predicting product demand based on historical sales data, incorporating factors such as seasonality, competitors' data, and trade promotions.

Exponentially Weighted Moving Average (EWMA): The EWMA method showed applicability in calculating suggested order quantities (SOQ) by adapting to recent sales trends without overloading computational capacities, thus maintaining a seamless user experience.

Logarithmic Multiple Regression: This method effectively modeled price elasticity, highlighting the non-linear relationship between price changes and demand.

Overall, the study's findings underscore the value of data science for specific marketing processes such as demand forecasting, inventory management, product recommendation, and customer engagement. These use cases can be leveraged through easy-to-understand methods.

Cross-Functional Collaboration

The research highlighted the importance of cross-functional collaboration in creating advanced analytics capabilities, which requires coordinated efforts across marketing, finance, sales, supply chain, IT departments, and specifically data science teams. This collaboration ensures accurate data collection, sharing, and interpretation, leading to more effective decision-making and implementation of predictive models.

Comparison with Other Research

The results of this paper align with other literature and scholarly articles on predictive analytics. Similar to findings by Wang, George, et al. [9], this study confirmed the efficiency of regression models in demand forecasting. However, the incorporation of MBA into predictive recommendations represents an advancement over traditional models. This aligns with recent studies that emphasize integrating multiple data science techniques for enhanced accuracy. The emphasis on cross-functional collaboration supports findings by other scholars [10], who argue that the success of data-driven strategies often depends on effective communication and cooperation between departments. This integrated approach is consistent with the work of Foster Provost and Tom Fawcett [11], who highlight the need for a systemic application of data science across business functions.

Limitations

Several limitations should be acknowledged. The study relied on a limited dataset, which may not fully capture the complexity of real-world scenarios. Additionally, the model assumptions, particularly the linearity assumed in the simple and multiple regression models, may not always hold true. Future studies should consider larger datasets and explore non-linear models applicable to real business use cases to address these limitations.

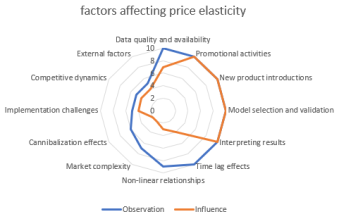

For example, in price elasticity modeling, assumptions were introduced about the factors that may influence elasticity. However, according to the regression results, none of the introduced factors was statistically significant. In addition, when researching price elasticity, I drew on other scholars' articles [12], [13]. I concluded that the challenges in data science projects can be split into business-related and technology-related ones. Selecting the appropriate analytical model is a shared responsibility between the data science and business teams, so both can influence the decision. Implementation and delivery, however, are primarily managed by the IT and data science teams, with business personnel having limited direct influence and mostly observing the process. In price elasticity analysis, for instance, model selection involves both business and IT teams, while actual delivery lies mainly with IT and data science. This creates a risk of misalignment between teams unless business people are technically savvy enough to participate in developing and validating the model.

This illustrates that delivering data science capabilities involves organizational as well as technological challenges. Addressing them requires tight cross-functional collaboration and maintaining technical knowledge within business teams. Together, these keep communication effective, maintain strong bridges between business and technology, and mitigate the respective risks in complex organizational environments [14].

To visualize the challenges formulated above, I took price elasticity modeling as an example and structured the relevant factors according to my findings from the real use cases (see Figure 5).

Fig. 5: factors affecting price elasticity

As discussed in the previous sections, elasticity may in some cases be positive but below 1.

Interpretation: A positive elasticity below 1 means that when the price increases, demand also increases, but by a smaller percentage than the price. This is unusual, as demand typically decreases when the price increases (negative elasticity). Possible explanations include luxury or prestige goods (higher prices may signal higher quality or exclusivity), Giffen goods (rare cases where a price increase leads to higher demand due to income effects), and expectations of future price increases (people may buy more now if they expect prices to rise further).

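Revenue Implications: Because price and quantity move in the same direction, increasing the price will increase total revenue. For example, if elasticity is 0.5, a 10 % increase in price would lead to an approximately 5 % increase in quantity demanded, so revenue would rise by about 15.5 % (1.10 × 1.05 ≈ 1.155).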
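The revenue arithmetic for a positive elasticity can be checked with a short sketch. It assumes the same linear approximation as the example (percentage change in quantity ≈ elasticity × percentage change in price); `classify_elasticity` and `revenue_change` are hypothetical helpers, and the elasticity values in the loop are illustrative, not estimates from this study.

```python
def classify_elasticity(e: float) -> str:
    """Rough label for a price elasticity of demand."""
    if e > 0:
        return "positive (unusual: demand rises with price)"
    if e > -1:
        return "inelastic"
    if e == -1:
        return "unit elastic"
    return "elastic"


def revenue_change(elasticity: float, price_change: float) -> float:
    """Approximate relative revenue change after a relative price change.

    Uses the linear approximation %dQ ~= elasticity * %dP.
    Arguments and result are fractions (0.10 means 10 %).
    """
    quantity_change = elasticity * price_change
    # New revenue / old revenue = (1 + %dP) * (1 + %dQ).
    return (1 + price_change) * (1 + quantity_change) - 1


# Effect of a 10 % price increase under a few illustrative elasticities.
for e in (0.5, -0.5, -1.5):
    print(f"e={e:+.1f} ({classify_elasticity(e)}): "
          f"revenue change {revenue_change(e, 0.10):+.1%}")
```

Under a constant-elasticity (log-log) demand curve, the exact quantity response to a 10 % price increase with elasticity 0.5 would be 1.10^0.5 - 1 ≈ 4.9 % rather than 5 %, so the linear figure is an approximation that works best for small price changes.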

Marketing Perspective: This situation might suggest an opportunity to increase prices without losing sales volume, potentially leading to higher profits.

Caution: Verify that this positive elasticity is not caused by data issues or inconsistent factors in the analysis. It is crucial to validate the data and the model to ensure the result is not a statistical artifact or the product of model misspecification.
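One common form of misspecification is omitted-variable bias. The minimal sketch below assumes a hypothetical log-log demand model in which promotional intensity (`promo`) raises demand and price increases tend to coincide with heavily promoted periods. The data are synthetic and noise-free, chosen only to show the mechanism, and `ols` is a hypothetical plain-Python helper, not a library function. Leaving `promo` out of the regression lets the price coefficient absorb the promotion effect.

```python
def ols(regressors: list[list[float]], y: list[float]) -> list[float]:
    """Least-squares coefficients [intercept, b1, b2, ...].

    Solves the normal equations (X'X) b = X'y with Gauss-Jordan
    elimination; an intercept column is prepended to each row.
    """
    X = [[1.0, *row] for row in regressors]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = []
    for a in range(k):
        row = [sum(x[a] * x[b] for x in X) for b in range(k)]
        row.append(sum(x[a] * yi for x, yi in zip(X, y)))
        A.append(row)
    for col in range(k):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        # Eliminate this column from every other row.
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [v - factor * p for v, p in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic data: true elasticity -1.2, promo effect +0.8, and prices
# were raised mainly in heavily promoted periods.
log_price = [0.02, 0.09, 0.23, 0.28, 0.41, 0.50]
promo = [0, 1, 2, 3, 4, 5]
log_qty = [5.0 - 1.2 * p + 0.8 * m for p, m in zip(log_price, promo)]

naive = ols([[p] for p in log_price], log_qty)                   # promo omitted
full = ols([[p, m] for p, m in zip(log_price, promo)], log_qty)  # promo included

print(f"elasticity, price only:     {naive[1]:+.2f}")
print(f"elasticity, promo included: {full[1]:+.2f}")
```

In this constructed case the price-only model reports a positive elasticity, while including the promotion variable recovers the assumed -1.2. With real data the correction is rarely this clean, but the check (adding plausible drivers and watching whether the price coefficient changes sign) is a cheap way to test a suspicious positive estimate.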

Further Investigation: Explore other factors that might be influencing demand, such as changes in consumer preferences, marketing efforts, or the competitive landscape. While this scenario is possible, it is relatively uncommon, so if it is encountered, the data and model should be validated carefully.

Future Research Directions

Future research should focus on expanding the experimental datasets and including longer time frames to validate the data science findings. Advanced machine learning techniques, such as neural networks and ensemble methods, could be explored to provide deeper insights into complex market behaviors and sentiments. Additionally, investigating the impact of external factors such as economic conditions, consumer sentiment, and environmental factors on predictive models would offer a more holistic view of market dynamics.

Furthermore, future research should examine best practices for fostering cross-functional collaboration and its influence on the implementation of predictive analytics. Understanding how different departments can communicate and work together effectively will be crucial for maximizing the benefits and reducing the related data and organizational risks.

By addressing these areas, future research can build on the foundations laid by this study, further enhancing the application of predictive analytics in marketing and finance.

Conclusion

This research has provided a comprehensive review of the application of predictive analytics in marketing, revealing that data-driven methods may significantly optimize marketing approaches. Key findings include the effectiveness of Market Basket Analysis (MBA), Regression Analysis, Exponentially Weighted Moving Average (EWMA), and Logarithmic Regression in improving decision-making processes through accurate forecasting based on historical data and various internal and external factors.

The primary objective of this study was to explore real business use cases of predictive analytics in marketing, highlighting their practical applications without delving much into the specific data science methods themselves.

This research is relevant for readers who want to understand whether predictive analytics can help optimize business routines, classify and group event outcomes, forecast numeric values, and identify patterns. This knowledge may help marketing and finance professionals stay informed about new tools and approaches that can enable strategic decisions and improve operational efficiency and employee productivity.

However, the study acknowledges certain limitations. The reliance on historical data means that predictive models may not fully account for unexpected future events or rapidly changing market conditions. Additionally, the complexity of data preparation and model construction requires significant expertise and resources.

In a broader context, despite the challenges presented, this research underscores the growing importance of predictive analytics for businesses. As businesses increasingly turn to data-driven methods to navigate complex market environments, the insights provided by this study offer guidance for leveraging advanced analytics to achieve better outcomes.

In conclusion, the integration of advanced analytics into business operations represents a significant advancement in optimizing traditional ways of working. By adopting data-driven models and various predictive techniques, businesses can go the extra mile, which is the cornerstone of the never-ending pursuit of operational efficiency.

References:

- Blum, Avrim, John Hopcroft, and Ravindran Kannan. Foundations of data science. Cambridge University Press, 2020.

- Chapman, Peter. “CRISP-DM 1.0: Step-by-step data mining guide.” (2000). https://www.semanticscholar.org/paper/CRISP-DM-1.0%3A-Step-by-step-data-mining-guide-Chapman/54bad20bbc7938991bf34f86dde0babfbd2d5a72

- Brady, Henry E. “The challenge of big data and data science.” Annual Review of Political Science 22.1 (2019): 297–323. https://www.annualreviews.org/content/journals/10.1146/annurev-polisci-090216-023229

- Farayola, Oluwatoyin Ajoke, et al. “Advancements in predictive analytics: A philosophical and practical overview.” World Journal of Advanced Research and Reviews (2024). https://wjarr.com/content/advancements-predictive-analytics-philosophical-and-practical-overview

- Kelleher, John D., and Brendan Tierney. Data science. MIT Press, 2018. https://books.google.com/books?hl=ru&lr=&id=UlpVDwAAQBAJ&oi=fnd&pg=PP7&dq=%22Data+Science%22&ots=vWkUZob49I&sig=-eqdO0r9LQ7MBNzGAfb4VMrOh7Y

- Zamil, A. M. A., A. Al Adwan, and T. G. Vasista. “Enhancing customer loyalty with market basket analysis using innovative methods: a python implementation approach.” International Journal of Innovation, Creativity and Change 14.2 (2020): 1351–1368. https://www.academia.edu/download/95684375/IJICC_MBA_Python.pdf

- Cohen, Maxime C., et al. Demand prediction in retail: A practical guide to leverage data and predictive analytics. Springer, 2022. https://link.springer.com/book/10.1007/978-3-030-85855-1

- Rink, David. “Strategic pricing across the product’s sales cycle: a conceptualization.” Innovative Marketing 13.3 (2017): 6–16. doi:10.21511/im.13(3).2017.01. https://www.researchgate.net/publication/320983199_Strategic_pricing_across_the_product's_sales_cycle_a_conceptualization

- Wang, George C. S., and Chaman L. Jain. Regression analysis: modeling & forecasting. Institute of Business Forec, 2003. https://books.google.pl/books?hl=ru&lr=&id=dRQAkwHHmtwC&oi=fnd&pg=PR3&dq=regression+models+for+forecasting&ots=eo6wN2xVWp&sig=AvrrypgF74wZLKU_ER_RPfPviUA&redir_esc=y#v=onepage&q=regression%20models%20for%20forecasting&f=false

- Passi, Samir, and Steven J. Jackson. “Trust in Data Science: Collaboration, Translation, and Accountability in Corporate Data Science Projects.” Proc. ACM Hum.-Comput. Interact. 2, CSCW (November 2018): Article 136, 28 pages. https://doi.org/10.1145/3274405

- Provost, Foster, and Tom Fawcett. Data Science for Business: What you need to know about data mining and data-analytic thinking. O'Reilly Media, Inc., 2013. https://books.google.pl/books?hl=ru&lr=&id=EZAtAAAAQBAJ&oi=fnd&pg=PP1&dq=data+science+%2B+business&ots=ymXIWv2RB-&sig=j3Dnb5ULpJPhSLgl7pM8bFIYJnY&redir_esc=y#v=onepage&q=data%20science%20%2B%20business&f=false

- Schroeder, Ralph. “Big data business models: Challenges and opportunities.” Cogent Social Sciences 2.1 (2016): 1166924. https://www.tandfonline.com/doi/epdf/10.1080/23311886.2016.1166924?needAccess=true

- Brady, Henry E. “The challenge of big data and data science.” Annual Review of Political Science 22.1 (2019): 297–323. https://www.annualreviews.org/docserver/fulltext/polisci/22/1/annurev-polisci-090216-023229.pdf?expires=1721899772&id=id&accname=guest&checksum=F77C46CE013CEE6B1189ABE4832EBDF2

- Cao, Longbing. “Data science: challenges and directions.” Communications of the ACM 60.8 (2017): 59–68. https://dl.acm.org/doi/fullHtml/10.1145/3015456