Java for UI Autotests with Selenide and Selenoid

Kirillov Roman Mikhailovich, Technical Team Lead, Testing

Lemma Group LLC (Moscow)

Introduction to the topic

In modern software development, ensuring the reliability and stability of web applications has become a critical concern. Automation of user interface (UI) testing plays an essential role in achieving this goal, especially given the growing complexity and interactivity of web systems. Developers and analysts consistently strive to enhance the quality of digital services, and automated testing provides a scalable and systematic method to verify their functionality. This article explores the practical integration of Java with the Selenide and Selenoid frameworks to improve the efficiency, maintainability, and scalability of UI tests.

This research is aimed at computer science professionals, graduate students, researchers, and engineers involved in software testing and development. The paper systematically examines the capabilities of Java as a programming language for UI testing, with a special focus on its interoperability with Selenide and Selenoid. The objective is to provide a comprehensive understanding of these tools and demonstrate effective testing strategies through technical illustrations.

Java continues to maintain a strong position in the field of automated testing due to its platform independence, rich library support, and broad adoption in enterprise environments. The analysis begins with a brief justification for Java’s dominance in test automation. Subsequently, attention is directed toward Selenide, a high-level wrapper for Selenium WebDriver known for its simplified syntax and enhanced waiting mechanisms. Particular emphasis is placed on Selenoid, a tool for executing UI tests in isolated containerized environments. Selenoid accepts the same remote WebDriver connections as a Selenium Grid hub, which makes parallel test execution straightforward, and it can also record video of test sessions.

The presented material is structured to provide both theoretical context and practical value. Key areas covered include the architectural foundations of test automation using Java, implementation patterns such as Page Object, integration with TestNG, and advanced configuration scenarios for running cross-browser tests using Selenoid. Test cases based on the website yandex.ru are used for illustrative purposes, reflecting realistic testing scenarios.

Furthermore, the article addresses specific challenges related to Safari browser testing and proposes viable workarounds. These include the use of real macOS machines or cloud platforms that offer Safari environments. The discussion also includes an overview of nuances in browser behavior that may arise in different environments, with recommendations on how to mitigate such challenges.

To provide a clear and logical structure, the content is divided into thematically organized sections. The first part presents the core principles of using Selenide, followed by an in-depth review of Selenoid. Then, the paper explores the application of the Page Object pattern and concludes with practical examples and references to relevant documentation and tools.

The findings and examples presented in this article are intended to serve as a practical guide for testers who wish to enhance their knowledge of automated UI testing using Java and related technologies. The content aims to bridge the gap between academic study and industrial application, offering insights that are directly applicable in professional practice.

Benefits and basics of Java for UI testers

Java has been employed in a substantial number of large-scale software projects due to the stability and maturity of its ecosystem. One of its distinguishing characteristics is a reliable and consistently evolving architecture. For novice testers, Java offers a relatively gentle learning curve owing to its logical syntax, extensive documentation, and rich community support. An additional strength of the language lies in its backward compatibility: core developers ensure that new language features do not disrupt the functionality of existing code. As a result, many libraries created more than a decade ago remain relevant and fully functional today.

In the context of web application testing, Java demonstrates considerable advantages through its comprehensive set of tools and frameworks. Build systems such as Maven and Gradle simplify dependency management and project configuration. Testing frameworks like TestNG provide flexible mechanisms for test grouping, parameterization, and execution control. Moreover, auxiliary libraries, including Apache Commons, facilitate operations with files, network connections, and other system resources. Collectively, these components form a robust foundation for the development of comprehensive test suites capable of addressing diverse aspects of user interface behavior.

The sustained preference for Java in UI test automation, despite the availability of alternatives such as JavaScript, Python, or C#, can be attributed to several fundamental principles. Among them is the enduring concept of "write once, run anywhere" supported by the Java Virtual Machine (JVM), which allows test code to be executed across multiple platforms with minimal modification. This feature significantly reduces platform-specific implementation overhead and enhances the portability of automated tests. Accordingly, tests authored in Java can be reliably executed on Windows, Linux, and macOS systems without requiring environment-specific adaptations.

Another factor contributing to Java’s continued relevance is the existence of a large and active community of testing professionals. Numerous technical blogs, forums, and online communication channels, such as specialized Telegram groups, serve as platforms for knowledge exchange and problem-solving. The accessibility of such resources facilitates continuous learning and prompt resolution of technical issues that may arise during test development and execution.

Subsequent sections of this paper will examine the practical implementation of Java in conjunction with the Selenide framework. Prior to that, however, it is important to develop a clear understanding of Selenide’s architectural principles and functional capabilities as a high-level abstraction over Selenium WebDriver.

Basic introduction to Selenide

Selenide is a specialized Java library that extends Selenium WebDriver and is designed to simplify the process of creating user interface tests. It introduces a higher level of abstraction over standard WebDriver operations and automates many of the configuration and waiting routines that would otherwise require manual implementation.

When working with Selenium directly, testers are responsible for managing multiple technical aspects, including browser driver setup, element visibility waiting strategies, and handling of exceptions caused by asynchronous loading or unstable network conditions. Selenide, by contrast, encapsulates these concerns within its API, allowing the tester to concentrate on validating functional outcomes rather than configuring execution contexts.

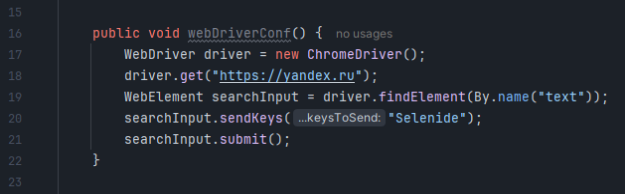

For example, when using Selenium, it is common to write code that explicitly sets up the WebDriver and configures timeouts:

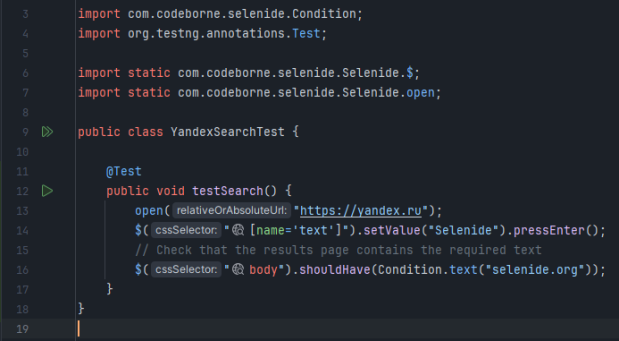

Fig. 1. WebDriver configuration with Selenium

In Selenide, the same functionality is achieved with far more concise syntax. It is sufficient to define the target browser in the configuration object and use compact selectors and fluent methods to interact with page elements:

Fig. 2. Example with Selenide

Selenide's default behavior includes automatic waiting for element presence and state. If a requested element does not appear immediately, Selenide keeps polling for it until a timeout expires (four seconds by default). This eliminates the need for testers to configure explicit or implicit waits manually. For instance, the statement $("#element").shouldHave(text("expected")) re-evaluates the element’s content until the condition is satisfied or the timeout expires.
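The waiting behavior described above can be pictured as a simple polling loop. The following is a minimal sketch in plain Java, not Selenide's actual implementation; the class name PollingWait, the method waitUntil, and the timeout and interval values in main are assumptions made for illustration.

```java
import java.util.function.BooleanSupplier;

/** Illustrative polling wait; a sketch of the idea, not Selenide's own code. */
final class PollingWait {

    private PollingWait() { }

    /**
     * Re-checks the condition until it holds or the timeout elapses.
     * Returns true if the condition was met in time, false otherwise.
     */
    static boolean waitUntil(BooleanSupplier condition, long timeoutMs, long pollIntervalMs) {
        long deadline = System.nanoTime() + timeoutMs * 1_000_000L;
        while (true) {
            if (condition.getAsBoolean()) {
                return true;
            }
            if (System.nanoTime() >= deadline) {
                return false;
            }
            try {
                Thread.sleep(pollIntervalMs);
            } catch (InterruptedException e) {
                // Restore the interrupt flag and give up waiting.
                Thread.currentThread().interrupt();
                return false;
            }
        }
    }

    public static void main(String[] args) {
        long start = System.currentTimeMillis();
        // Simulates an element whose text shows up about 300 ms after the page opens.
        boolean appeared = waitUntil(() -> System.currentTimeMillis() - start >= 300, 4_000, 100);
        System.out.println("condition met: " + appeared);
    }
}
```

The point of the sketch is that the test states only the expected condition; the retry loop and the timeout live in one place instead of being repeated in every test.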

One of the most prominent syntactic enhancements provided by Selenide is the pair of static methods $ and $$, which locate a single element and a collection of elements, respectively. These methods streamline the syntax and make the test code significantly more readable. The library also provides a range of methods tailored to common UI actions:

– setValue(String text): enters text into the field.

– append(String text): adds text to the existing value without overwriting it.

– pressEnter(), pressEscape(), pressTab(): emulate pressing the corresponding keys.

– click(): performs a standard click.

– hover(): moves the mouse pointer over the element.

– should(Condition...): a universal method that accepts one or more conditions (for example, visible, enabled, text(...)).

– shouldBe(Condition...) and shouldHave(Condition...): variations of the previous method that let the check read more naturally.

– uploadFromClasspath(String filePath): uploads a file stored in the project resources.

– download(): downloads the file behind the element, which makes it possible to check that the element actually serves the correct file.

These methods facilitate the development of concise, maintainable, and readable test cases. However, as testing requirements increase in complexity—encompassing diverse UI components, transitions, and dynamic content—it becomes essential to implement architectural design patterns such as Page Object. This ensures long-term maintainability and reusability of test logic.

Using Page Object pattern with TestNG

The Page Object pattern represents a widely adopted architectural design in the domain of automated UI testing. Its primary objective is to enhance code maintainability, modularity, and clarity by encapsulating the user interface structure and behavior within dedicated classes. According to this pattern, each significant page or page component in a web application is represented by a separate class. These classes include fields that correspond to web elements and methods that describe user interactions with these elements.

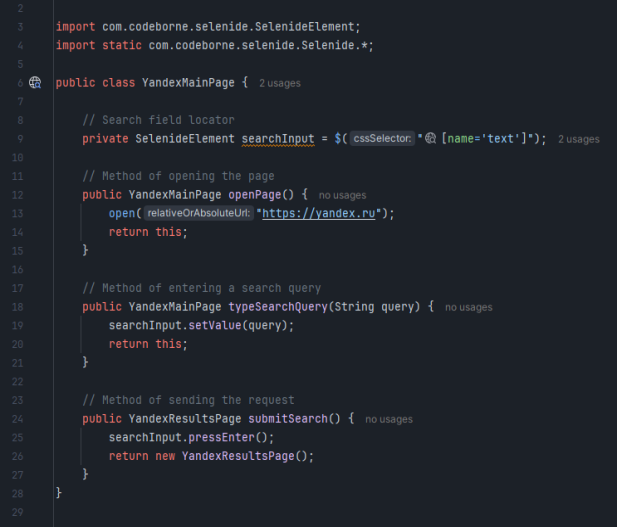

This approach is particularly effective in projects that involve complex user interfaces. It facilitates changes and updates to the UI by localizing modifications within a specific class, thus reducing the risk of introducing unintended side effects. For example, if the search input field on the Yandex homepage undergoes a change in its CSS selector or ID, only the locator definition within the corresponding Page Object class (e.g., YandexMainPage) needs to be updated. All associated test cases remain unaffected due to their reliance on the centralized interface.

Fig. 3. Example of how methods work for the main page

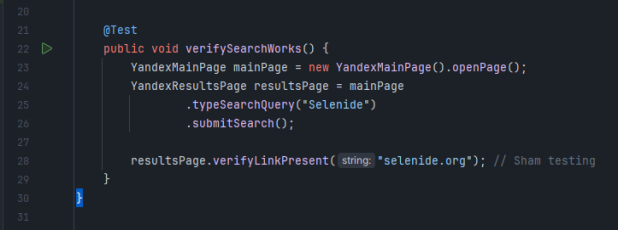

The test scenario, in this case, becomes highly readable and mirrors the logical flow of user actions: the homepage is opened, a query is entered into the search field, the Enter key is pressed, and a new results page is loaded. Each of these steps corresponds to a method invocation from the Page Object class. This abstraction enables domain-level test scripts that emphasize behavior over implementation details.

Additional Page Object classes can then be developed for later pages, such as YandexResultsPage, encapsulating assertions and interactions relevant to search result verification. The logical sequence of actions across multiple classes enhances the test structure and reduces redundancy.
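To make the structure of the pattern concrete, here is a minimal sketch in plain Java. The Browser interface and the RecordingBrowser fake are hypothetical stand-ins for the browser API (in a real suite Selenide's open and $ calls would take their place), and the "#text" locator is an assumed value, not one taken from the live site.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for the browser API; in a real suite Selenide plays this role. */
interface Browser {
    void open(String url);
    void type(String selector, String text);
    void pressEnter(String selector);
}

/** Fake browser that only records what was asked of it. */
final class RecordingBrowser implements Browser {
    final List<String> actions = new ArrayList<>();

    @Override public void open(String url) { actions.add("open " + url); }
    @Override public void type(String selector, String text) { actions.add("type " + selector + " " + text); }
    @Override public void pressEnter(String selector) { actions.add("enter " + selector); }
}

/** Page Object for the home page: the only place that knows the search-field locator. */
final class YandexMainPage {
    private static final String URL = "https://yandex.ru";
    private static final String SEARCH_INPUT = "#text"; // assumed locator, for illustration only

    private final Browser browser;

    YandexMainPage(Browser browser) { this.browser = browser; }

    YandexMainPage open() {
        browser.open(URL);
        return this;
    }

    /** Types the query, submits it, and hands over to the next page's object. */
    YandexResultsPage searchFor(String query) {
        browser.type(SEARCH_INPUT, query);
        browser.pressEnter(SEARCH_INPUT);
        return new YandexResultsPage(browser);
    }
}

/** Page Object for the results page; checks on the results would be added here. */
final class YandexResultsPage {
    private final Browser browser;

    YandexResultsPage(Browser browser) { this.browser = browser; }
}
```

A test then reads as `new YandexMainPage(browser).open().searchFor("selenide")`. If the locator changes, only the SEARCH_INPUT constant is touched.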

Fig. 4. Example of calling methods in a test scenario

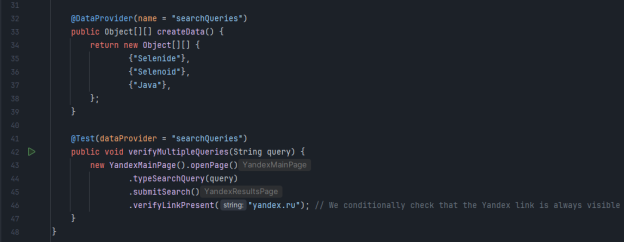

TestNG provides further enhancements for structured test execution. It supports grouping of test methods, dependency management, and the configuration of setup and teardown routines through annotations such as @BeforeMethod and @AfterMethod. One of the most powerful features of TestNG is its support for parameterized testing via the @DataProvider annotation. This allows a single test method to be executed with multiple input values, improving code reuse and enabling coverage of a broader set of scenarios.

For instance, it is possible to define an array of search queries that will be passed to the test method during execution. Each test run utilizes a different query, and the test logic remains unchanged.
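The mechanics can be sketched without the framework. A TestNG data provider returns an Object[][] in which every row holds the arguments for one invocation; the plain-Java sketch below mimics that contract with a hand-written loop. The class DataDrivenSketch, its method names, and the query strings are assumptions for illustration, and runForEachRow only stands in for what the test runner does.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

final class DataDrivenSketch {

    private DataDrivenSketch() { }

    /** Same shape as a TestNG data provider: one row per invocation, one column per parameter. */
    static Object[][] searchQueries() {
        return new Object[][] {
            {"selenide"},
            {"selenoid"},
            {"testng data provider"}
        };
    }

    /** Stand-in for the test runner: calls the unchanged test body once per row. */
    static int runForEachRow(Object[][] rows, Consumer<String> testBody) {
        int invocations = 0;
        for (Object[] row : rows) {
            testBody.accept((String) row[0]);
            invocations++;
        }
        return invocations;
    }

    public static void main(String[] args) {
        List<String> seen = new ArrayList<>();
        int runs = runForEachRow(searchQueries(), seen::add);
        System.out.println(runs + " invocations with queries " + seen);
    }
}
```

Adding a scenario means adding a row; the test body itself stays untouched.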

Fig. 5. Example of using @DataProvider with a test scenario

This technique improves test coverage and reduces duplication, because a single test method replaces a series of near-identical ones. It is especially effective in cases where functional behavior must be validated under varying input conditions.

In conclusion, the Page Object pattern, when combined with the configuration and parameterization capabilities of TestNG, provides a solid foundation for constructing maintainable, scalable, and readable UI test suites. The synergy of these techniques is crucial for large-scale automated testing frameworks that require consistency, reusability, and high clarity of test scenarios.

A closer look at Selenide methods: small "features"

Although many test developers are familiar with the core features of Selenide, the framework offers a range of additional capabilities that address more complex and dynamic testing scenarios. These features become particularly valuable when dealing with modern web applications that utilize asynchronous content loading, dynamic DOM manipulation, and variable data representations.

A common task in automated testing is interacting with elements that are not immediately available in the DOM or are rendered asynchronously via AJAX requests. To address such cases, Selenide provides support for extended conditions. One such condition is Condition.matchText(String regex) (named matchesText in older Selenide releases), which enables verification of text content using regular expressions. This functionality proves especially useful when dealing with dynamic content such as timestamps, session identifiers, or user-specific data fragments.
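What a regular-expression check buys over a literal comparison can be shown with java.util.regex alone. The sketch below assumes the check succeeds when any part of the element text matches the pattern; Selenide's own matching rules may differ in detail. The class name DynamicTextCheck and the sample strings are made up for illustration.

```java
import java.util.regex.Pattern;

final class DynamicTextCheck {

    private DynamicTextCheck() { }

    /** True if any part of the text matches the regular expression. */
    static boolean textMatches(String actualText, String regex) {
        return Pattern.compile(regex, Pattern.DOTALL).matcher(actualText).find();
    }

    public static void main(String[] args) {
        // The order number and the time change on every run, so a literal comparison would be brittle.
        String banner = "Order #48213 created at 10:42";
        System.out.println(textMatches(banner, "Order #\\d+ created at \\d{2}:\\d{2}"));
    }
}
```

The pattern pins down the stable part of the message and leaves the changing fragments to character classes.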

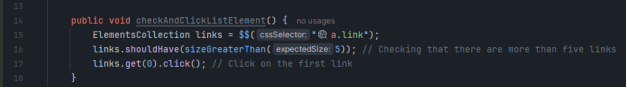

In addition to handling single elements, Selenide facilitates interaction with collections of elements through the ElementsCollection API. This allows testers to work with multiple elements simultaneously, which is essential when validating lists, tables, or repeated UI components. For instance, on a search results page such as Yandex’s, it is possible to use the double dollar sign method ($$) to obtain all hyperlink elements and perform bulk operations or assertions.

Fig. 6. Example of working with ElementsCollection

Selenide also supports attribute-level verification. A common example includes checking the value of the alt attribute in an image element. The statement $("img#logo").shouldHave(attribute("alt", "Yandex")) confirms that the logo’s alternate text is correctly specified. This type of assertion is useful for validating accessibility attributes, SEO-related tags, and correctness of dynamically generated UI attributes.

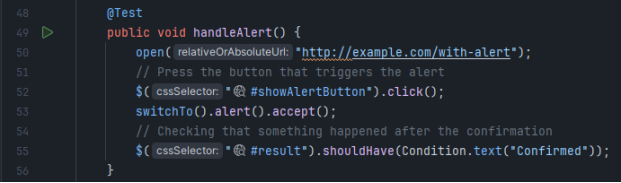

Interaction with JavaScript dialog boxes such as Alert, Confirm, and Prompt is simplified as well. Selenide’s switchTo().alert() returns the standard WebDriver Alert object, whose accept(), dismiss(), getText(), and sendKeys() methods handle the dialog, while the static shortcuts confirm(), dismiss(), and prompt() in the Selenide class cover the most common cases in a single call. This abstraction enhances code clarity and reduces the possibility of synchronization errors.

Fig. 7. Example of working with a dialog box in a test scenario

Selenide’s support for frame navigation also deserves mention. The method switchTo().frame("frameName") allows switching context into an iframe, while switchTo().defaultContent() returns the context to the main document. These methods are tightly integrated with Selenide’s implicit waiting system, ensuring that the context switch occurs only after the frame becomes available, thereby improving test stability.

Debugging failing test cases is another area where Selenide offers convenience. The method screenshot(String fileName) creates a snapshot of the current browser view and stores it for post-execution analysis. This capability proves valuable when investigating layout issues, visual shifts, or unexpected UI behavior resulting from external factors such as incomplete loading or browser-specific rendering errors.

Moreover, Selenide provides mechanisms to execute setup and teardown logic before and after each test. Although some of these features can be implemented directly within Selenide, it is often recommended to configure them within the TestNG framework using the @BeforeMethod and @AfterMethod annotations. These hooks are used to reset session state, clear cookies, or close browser instances via WebDriverRunner.closeWebDriver().

In summary, the extended functionality offered by Selenide significantly enhances the expressiveness and robustness of UI tests. Its integrated conditions, support for collections and attributes, streamlined handling of dialogs and frames, and built-in debugging tools make it an effective solution for developing reliable and maintainable automation suites.

Introduction to Selenoid and cross-browser tests

After local test scenarios are stabilized and validated, the focus often shifts toward executing these tests in parallel and across different web browsers. This requirement emerges frequently in enterprise environments and large-scale testing pipelines, where efficiency and coverage are of paramount importance. Selenoid, a powerful tool developed by the Aerokube team, addresses these needs through containerized browser execution.

Selenoid is an alternative implementation of the Selenium hub: a lightweight, Docker-based solution that runs each browser in an isolated container. Unlike traditional grid setups, which may involve complex infrastructure, Selenoid simplifies deployment and scaling by utilizing pre-configured Docker images of browsers. This enables consistent test environments and better resource utilization.

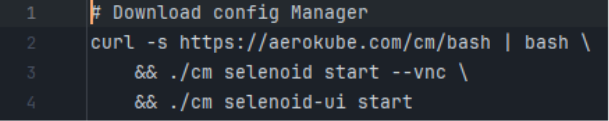

The deployment of Selenoid begins with the installation of Docker and the use of Aerokube’s Configuration Manager (CM), which assists in automatically downloading the necessary images and generating the configuration files. A typical setup includes a browsers.json configuration file, where each browser version and its associated image is declared. Selenoid then launches the matching container when a session is requested and removes it when the session ends.
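As a rough illustration of the browsers.json file mentioned above, a minimal configuration might look like the fragment below. The version numbers and image tags are placeholders chosen for this sketch and should be replaced with the images actually pulled on the host; the layout (a default version plus a map of versions, each with image, port, and path) follows the format generated by CM.

```json
{
  "chrome": {
    "default": "120.0",
    "versions": {
      "120.0": {
        "image": "selenoid/vnc_chrome:120.0",
        "port": "4444",
        "path": "/"
      }
    }
  },
  "firefox": {
    "default": "120.0",
    "versions": {
      "120.0": {
        "image": "selenoid/vnc_firefox:120.0",
        "port": "4444",
        "path": "/wd/hub"
      }
    }
  }
}
```

A test that requests a browser name and version not listed in this file cannot be served, so the file and the capabilities used in the tests have to be kept in step.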

Fig. 8. Download and start command

One of the notable features of Selenoid is its support for real-time monitoring over VNC. When Selenoid is started through CM with the --vnc flag, browser images that include a VNC server are downloaded, and testers gain access to live sessions of the browser instances that request the enableVNC capability. This functionality is accessible either through a dedicated VNC viewer or via the web-based Selenoid UI. The latter provides a convenient graphical interface for tracking test execution, viewing logs, downloading videos, and analyzing screenshots.

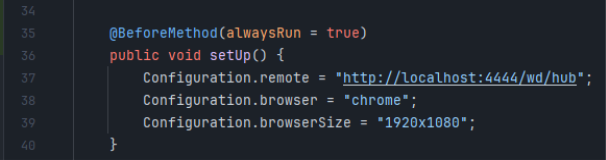

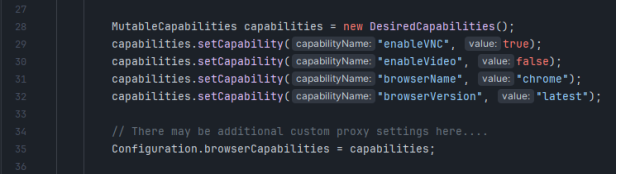

To integrate Selenide-based tests with Selenoid, the tester configures the remote WebDriver URL to point to the active Selenoid hub. This is achieved through the Configuration.remote property in Selenide. Additionally, the desired capabilities, such as browser type and version, are specified to match those defined in browsers.json.
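The data that has to reach Selenoid can be sketched as a plain map, independent of any client library. In the sketch below the endpoint http://localhost:4444/wd/hub assumes a local Selenoid on its default port, the class name SelenoidCapabilities is hypothetical, and the version passed in must match an entry in browsers.json. The enableVNC and enableVideo keys under selenoid:options are Selenoid-specific capabilities; in a Selenide project the URL would go into Configuration.remote and the capabilities into Configuration.browserCapabilities.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class SelenoidCapabilities {

    /** Assumed local Selenoid endpoint; replace host and port with your own. */
    static final String REMOTE_URL = "http://localhost:4444/wd/hub";

    private SelenoidCapabilities() { }

    /** Builds the capability map for one browser session. */
    static Map<String, Object> forBrowser(String browserName, String browserVersion) {
        Map<String, Object> selenoidOptions = new LinkedHashMap<>();
        selenoidOptions.put("enableVNC", true);   // allow a live view of the session
        selenoidOptions.put("enableVideo", true); // ask Selenoid to record the session

        Map<String, Object> capabilities = new LinkedHashMap<>();
        capabilities.put("browserName", browserName);
        capabilities.put("browserVersion", browserVersion);
        capabilities.put("selenoid:options", selenoidOptions);
        return capabilities;
    }

    public static void main(String[] args) {
        System.out.println(REMOTE_URL + " <- " + forBrowser("chrome", "120.0"));
    }
}
```

Keeping this in one factory method means a change of hub address or recording policy touches a single class.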

Fig. 9. Selenide configuration for Selenoid access

Cross-browser testing can be further enhanced by leveraging TestNG’s @DataProvider functionality. By creating an array of browser identifiers, such as "chrome", "firefox", and "opera", the same test method can be executed multiple times, each time with a different browser context. During execution, Selenoid dynamically provisions the required browser containers, provided they are available and correctly configured.

Fig. 10. Example of test scenario using @DataProvider for cross-browser testing and running tests on Selenoid

The addition of new browsers, such as Microsoft Edge, involves minimal changes: it is sufficient to add the browser identifier to the data provider array and ensure the corresponding Docker image is present and referenced in the configuration. This modularity and scalability make Selenoid an effective tool for continuous integration and continuous testing environments.
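One way to keep that change out of the code entirely is to read the browser list from configuration. The sketch below is an assumption-laden illustration: the property name browsers and the class BrowserMatrix are hypothetical, and the method simply produces the row-per-browser array that a data provider would return.

```java
import java.util.ArrayList;
import java.util.List;

final class BrowserMatrix {

    private BrowserMatrix() { }

    /** Turns "chrome, firefox,opera" into data-provider rows: {"chrome"}, {"firefox"}, {"opera"}. */
    static Object[][] rowsFrom(String commaSeparatedBrowsers) {
        List<Object[]> rows = new ArrayList<>();
        for (String name : commaSeparatedBrowsers.split(",")) {
            String trimmed = name.trim();
            if (!trimmed.isEmpty()) { // skip empty entries such as "chrome,,firefox"
                rows.add(new Object[] {trimmed});
            }
        }
        return rows.toArray(new Object[0][]);
    }

    public static void main(String[] args) {
        // "browsers" is a hypothetical property, e.g. -Dbrowsers=chrome,firefox,MicrosoftEdge
        String configured = System.getProperty("browsers", "chrome,firefox,opera");
        for (Object[] row : rowsFrom(configured)) {
            System.out.println(row[0]);
        }
    }
}
```

With this arrangement a CI job can widen or narrow the browser matrix through a command-line property, provided the corresponding images are declared in browsers.json.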

Overall, Selenoid’s architecture allows for efficient distribution of test executions, reduced infrastructure complexity, and improved consistency of test outcomes across browser types. Its compatibility with Docker and Selenium, combined with its ease of setup and real-time monitoring capabilities, make it a valuable component in modern UI automation frameworks.

Safari peculiarities and ways to resolve the difficulties

Among modern web browsers, Safari presents a number of distinct challenges for automated testing, particularly when the goal is to incorporate it into cross-browser pipelines. The limitations stem not only from the architecture of the Safari browser itself, but also from licensing restrictions and technical constraints associated with the macOS operating system.

Unlike Chrome or Firefox, Safari cannot be reliably containerized using standard Docker-based infrastructure. This limitation is primarily due to Apple’s licensing policy, which prohibits the virtualization of macOS on non-Apple hardware. Consequently, Selenoid does not provide official Docker images for Safari, making direct integration into containerized testing frameworks infeasible.

Furthermore, SafariDriver, the official WebDriver implementation for Safari, has historically exhibited instability and feature limitations, particularly on earlier versions of macOS. For instance, Safari offers no headless mode, and issues with file upload dialogs have been documented in several Safari releases. These deficiencies complicate the creation of fully automated test pipelines that rely on uniform WebDriver behavior across browsers.

Despite these constraints, several viable approaches exist for incorporating Safari into UI test automation:

- Physical macOS Infrastructure: One of the most reliable methods involves setting up actual macOS machines with Safari installed. After enabling the "Allow Remote Automation" option in Safari’s Develop menu, these machines can be registered as nodes in a Selenium Grid. While Selenoid manages containerized browsers (e.g., Chrome, Firefox), Safari test cases are routed through the physical macOS node, ensuring compatibility.

- Cloud-Based Testing Services: Platforms such as Sauce Labs, BrowserStack, and similar services provide remote access to real devices and browsers, including various versions of Safari running on macOS. These services offer REST APIs and integration libraries to allow seamless execution of test suites. Although such solutions may introduce additional costs, they eliminate the need for local hardware maintenance and are particularly useful when Safari tests are required only intermittently.

- Visual Regression Tools: In scenarios where functional automation on Safari is infeasible, it is still possible to verify UI rendering correctness through visual comparison. Tools like Percy capture page screenshots rendered in Safari and compare them against reference images. While this approach does not offer end-to-end functional validation, it can still detect layout anomalies, missing elements, and unintended visual regressions.

It is also critical to acknowledge the browser-specific restrictions that affect test design. For example, Safari imposes stricter rules regarding popup handling, file input dialogs, and certain JavaScript-driven actions. Test engineers must account for these behaviors by reviewing and adjusting browser settings and confirming compatibility with WebDriver capabilities. The "Allow Remote Automation" option sits in the Develop menu, which is hidden by default, and must be enabled explicitly to permit remote control.

Additionally, the evolution of the WebKit rendering engine—on which Safari is based—may introduce API-level changes across browser versions. Therefore, test engineers are advised to consult Apple’s official documentation to verify compatibility and to perform version-specific validations.

In summary, the inclusion of Safari in automated testing requires additional infrastructural and organizational effort compared to other browsers. However, when approached methodically—either through dedicated macOS environments or via cloud platforms—Safari testing can be effectively incorporated into a comprehensive quality assurance strategy.

A few useful subtleties when setting up Selenide+Selenoid

The integration of Selenide with Selenoid introduces a number of configuration nuances that require careful consideration. These subtleties become particularly important in projects where test environments must be stable, observable, and scalable in continuous integration (CI) pipelines or multi-threaded execution contexts.

First, in cases where video recording of test executions is necessary (e.g., for debugging failed tests), the Selenoid container itself must be started with a mounted video directory. According to the Selenoid documentation, this means launching it with an option of the form -v /path/to/video:/opt/selenoid/video, together with the OVERRIDE_VIDEO_OUTPUT_DIR environment variable set to the same host path, and requesting recording through the enableVideo capability. This ensures that all recorded video files are saved persistently and remain accessible for post-execution analysis.

Second, although Selenide is designed to automatically close the browser session upon completion of a test, this behavior may require explicit enforcement in environments involving parallel execution. In such cases, invoking WebDriverRunner.closeWebDriver() explicitly within the @AfterMethod hook of TestNG guarantees that each WebDriver instance is properly terminated and the associated Docker container is released. This practice contributes to more efficient resource management, particularly when Selenoid is configured to run a fixed number of concurrent containers.
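Why the explicit release matters under a fixed container limit can be modeled with a semaphore. This is a toy model in plain Java, not Selenoid code: the class SessionSlots and its methods are hypothetical, with tryOpen standing for starting a browser session and close standing for the teardown hook that closes the driver.

```java
import java.util.concurrent.Semaphore;

/** Toy model of a hub that allows a fixed number of simultaneous browser sessions. */
final class SessionSlots {
    private final Semaphore slots;

    SessionSlots(int limit) {
        this.slots = new Semaphore(limit);
    }

    /** Tries to start a session; false means every slot is still occupied. */
    boolean tryOpen() {
        return slots.tryAcquire();
    }

    /** Must be called once per opened session, the way a teardown hook closes the driver. */
    void close() {
        slots.release();
    }

    /** Runs a test body and always frees the slot, even when the body throws. */
    boolean runTest(Runnable testBody) {
        if (!tryOpen()) {
            return false;
        }
        try {
            testBody.run();
            return true;
        } finally {
            close();
        }
    }

    int freeSlots() {
        return slots.availablePermits();
    }
}
```

If close is skipped after a failing test, the slot stays occupied and later tests queue behind it; the finally block in runTest plays the role that @AfterMethod plays in the real suite.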

Another consideration involves Selenide’s configuration parameters such as Configuration.timeout and Configuration.headless. These settings, while beneficial in local testing environments, may behave differently when applied within containerized Selenoid instances. For example, headless mode can cause discrepancies in drag-and-drop operations or certain UI renderings, as the graphical context differs from fully rendered environments. In such cases, using VNC-enabled containers helps observe test behavior in real time and identify deviations.

In addition, scenarios may arise where browsers are required to operate behind a proxy or with specific security settings, such as custom certificates or relaxed SSL validation. Selenoid supports the injection of custom capabilities via JSON configuration or dynamically during runtime. These capabilities can be passed to Selenide using the DesiredCapabilities object or through Configuration.browserCapabilities . Examples include proxy settings, browser arguments, and preferences required for internal environments.
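A sketch of such a capability set, again as a plain map, is given below. The keys proxy, proxyType, httpProxy, sslProxy, and acceptInsecureCerts come from the W3C WebDriver capability vocabulary and goog:chromeOptions is Chrome's vendor-specific entry; the class name InternalEnvCapabilities, the proxy address, and the chosen browser arguments are assumptions for illustration.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

final class InternalEnvCapabilities {

    private InternalEnvCapabilities() { }

    /** Capabilities for a Chrome session that goes through a manual proxy and tolerates self-signed certificates. */
    static Map<String, Object> chromeBehindProxy(String proxyHostAndPort) {
        Map<String, Object> proxy = new LinkedHashMap<>();
        proxy.put("proxyType", "manual");
        proxy.put("httpProxy", proxyHostAndPort);
        proxy.put("sslProxy", proxyHostAndPort);

        Map<String, Object> chromeOptions = new LinkedHashMap<>();
        chromeOptions.put("args", Arrays.asList("--disable-gpu", "--window-size=1920,1080"));

        Map<String, Object> capabilities = new LinkedHashMap<>();
        capabilities.put("browserName", "chrome");
        capabilities.put("acceptInsecureCerts", true); // relaxed certificate validation
        capabilities.put("proxy", proxy);
        capabilities.put("goog:chromeOptions", chromeOptions);
        return capabilities;
    }

    public static void main(String[] args) {
        // Hypothetical proxy address used only for this demonstration.
        System.out.println(chromeBehindProxy("proxy.internal.example:3128"));
    }
}
```

Relaxed certificate checks are best limited to internal test environments, which is one reason to build these capabilities in a dedicated factory rather than in every test.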

Fig. 11. Parameters for running test scenarios for CI

This capabilities object is then used by Selenide when running the test. TestNG also integrates easily with CI systems (Jenkins, TeamCity, GitLab CI, etc.): once the build and run commands are defined in the pipeline, the tests can run in Selenoid automatically on each commit and generate reports.

The Selenoid UI is also worth mentioning. It is a web application that shows which sessions are currently running and which containers are busy, and it lets testers watch a live view of the browser when VNC is enabled. Logs, screenshots, and other details are available there as well, which gives testers a quick way to diagnose problems.

In the end, the combination of Selenide and Selenoid provides a powerful base for UI autotests, sparing testers the routine of configuring Selenium Grid and constantly writing explicit waits. The project team gets a flexible, clear system that can be maintained and scaled with little effort.

Tips for structuring your project

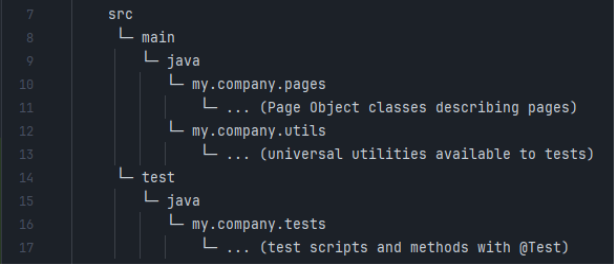

A well-organized project structure is crucial for the scalability, readability, and maintainability of any automated UI testing framework. In large teams or long-term projects, the absence of a consistent directory layout and module separation often leads to code duplication, unclear responsibilities, and inefficient onboarding of new contributors. Java-based projects, including those that use Selenide and Selenoid, benefit greatly from the adoption of standard architectural conventions.

The recommended approach is to separate source code and test code using the conventional Maven or Gradle directory layout:

– src/main/java — this directory should contain infrastructure-related components, including Page Object classes, configuration utilities, browser factories, and any reusable helper methods or constants;

– src/test/java — this directory should include test classes, test data providers, test suites, and any class containing direct assertions or test flows.

Fig. 12. Schematic of a standard automation project

This structure promotes the principle of separation of concerns. By isolating the infrastructure logic from the actual test cases, developers and testers can work in parallel and avoid conflicts. Moreover, this separation allows infrastructure components to be reused across multiple test suites and even different testing layers (e.g., API or performance testing).

Tests should be grouped in a meaningful way, such as by functional modules or use case categories. For example, all tests related to the search functionality on a website could be placed in a dedicated package named search, while tests targeting login and authentication could reside in the auth package. This categorization facilitates selective test execution and improves traceability of test coverage.

Test class names should follow a consistent and descriptive naming convention, such as SearchTests, LoginTests, or UserProfileTests. Similarly, method names should indicate the purpose of the test, e.g., shouldDisplaySearchResultsForValidQuery() or shouldShowErrorMessageForInvalidCredentials().

It is also recommended to store test data separately in configuration files (e.g., .json, .xml, .yaml) or resource classes. This practice helps decouple test logic from data and makes the system more adaptable to localization, environment changes, and data-driven testing.

For parameterization and runtime flexibility, configuration values such as browser type, base URL, and timeouts should be passed through environment variables or external property files. Tools like Maven Surefire, Gradle, or CI/CD pipelines can inject these values at runtime without modifying the codebase.
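A minimal sketch of such a lookup is shown below. The precedence (JVM property first, then environment variable, then a default) and the key names browser and base.url are assumptions made for this example rather than a convention prescribed by any of the tools; the class TestSettings is hypothetical.

```java
import java.util.Locale;
import java.util.function.Function;

final class TestSettings {

    private TestSettings() { }

    /**
     * Looks a value up in JVM properties first, then in environment variables
     * (key upper-cased, dots replaced by underscores), then falls back to the default.
     */
    static String resolve(String key, String defaultValue,
                          Function<String, String> propertyLookup,
                          Function<String, String> envLookup) {
        String fromProperty = propertyLookup.apply(key);
        if (fromProperty != null && !fromProperty.isEmpty()) {
            return fromProperty;
        }
        String envName = key.toUpperCase(Locale.ROOT).replace('.', '_');
        String fromEnv = envLookup.apply(envName);
        if (fromEnv != null && !fromEnv.isEmpty()) {
            return fromEnv;
        }
        return defaultValue;
    }

    /** Convenience overload that reads the real JVM properties and process environment. */
    static String resolve(String key, String defaultValue) {
        return resolve(key, defaultValue, System::getProperty, System::getenv);
    }

    public static void main(String[] args) {
        // Try: java -Dbrowser=firefox TestSettings.java
        System.out.println("browser  = " + resolve("browser", "chrome"));
        System.out.println("base.url = " + resolve("base.url", "https://yandex.ru"));
    }
}
```

Passing the lookups in as functions keeps the resolution rule testable without touching the real environment.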

In the context of reporting and diagnostics, structured output folders should be configured to capture test artifacts, such as logs, screenshots, and video recordings (when using Selenoid). These assets are essential for post-execution analysis and debugging.

Finally, documentation should not be overlooked. Each major package and class should contain brief Javadoc-style descriptions to explain its purpose and usage. A high-level README.md file or docs/ folder can describe the setup instructions, execution steps, and environment configuration guidelines.

By adhering to these structural guidelines, teams can ensure that their UI automation projects remain robust, comprehensible, and adaptable to future growth and changes.

A little about performance and parallel running

When the number of automated tests increases significantly, the total execution time grows proportionally. In such cases, it is advisable to consider parallel test execution. TestNG provides built-in capabilities for parallelization at the level of methods, classes, or tests within a suite. Selenoid, for its part, serves many sessions concurrently. By specifying a fixed number of concurrent browser containers in its configuration (e.g., 5–10), test execution can be distributed across these containers, thereby improving overall throughput.

However, the implementation of parallel testing introduces certain architectural requirements, particularly with regard to shared resources. If multiple tests access the same database, it becomes necessary to either allocate independent datasets for each thread or apply transactional isolation to ensure rollback of changes after each test execution. Failure to implement such strategies may result in race conditions, test deadlocks, or inconsistent outcomes. These challenges fall under the broader domain of test architecture design and should be addressed at the planning stage.
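The first strategy, an independent dataset per thread, might look like the following sketch. It uses only the standard library; the class name PerThreadTestData and the account naming scheme are hypothetical, and a real project would create the corresponding records in its own database. Each worker thread lazily receives its own account name through a ThreadLocal, so parallel tests never operate on the same record.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

/** Gives every test thread its own account name so parallel tests do not share data. */
final class PerThreadTestData {
    private static final AtomicInteger COUNTER = new AtomicInteger();

    // Initialized lazily, once per thread, with a unique hypothetical account name.
    private static final ThreadLocal<String> ACCOUNT =
            ThreadLocal.withInitial(() -> "ui_test_user_" + COUNTER.incrementAndGet());

    /** Returns the account reserved for the calling thread. */
    static String account() {
        return ACCOUNT.get();
    }

    /** Starts the given number of threads and collects the accounts they used. */
    static Set<String> accountsUsedBy(int workers) {
        Set<String> seen = ConcurrentHashMap.newKeySet();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < workers; i++) {
            Thread thread = new Thread(() -> {
                seen.add(account());
                seen.add(account()); // a repeated read in the same thread yields the same account
            });
            threads.add(thread);
            thread.start();
        }
        for (Thread thread : threads) {
            try {
                thread.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("Interrupted while waiting for workers", e);
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        // Four threads produce four distinct account names.
        System.out.println(accountsUsedBy(4).size());
    }
}
```

The alternative mentioned above, transactional isolation with rollback after each test, solves the same problem at the database level and is not shown here.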

Another aspect relates to the dynamic provisioning of browser containers. Selenoid starts a browser container on demand for each new session, so during mass parallel test runs a noticeable delay may occur while Docker initializes the containers. To mitigate this, it is common practice to pull the required browser images onto the host in advance, so that no time is spent downloading them during the run. Selenoid's startup settings, such as the limit on simultaneous sessions, allow this behavior to be tuned to the expected load and hardware constraints.

In continuous integration and delivery (CI/CD) pipelines, where source code compilation and automated testing are integrated into a unified workflow, parallel execution provides measurable gains. For illustrative purposes, consider a test suite comprising 1,000 individual tests, each taking approximately 10 seconds to complete. When executed sequentially, the total runtime is about 10,000 seconds (roughly 2 hours 47 minutes). With parallelization across 10 containers, the runtime is reduced to roughly 1,000 seconds (approximately 16–17 minutes), which considerably shortens the feedback loop.
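The arithmetic behind this estimate can be reproduced with a short calculation. The sketch below is an idealized model under stated assumptions: every test takes the same time, tests are spread evenly across containers, and container start-up overhead is ignored. The class and method names are illustrative only.

```java
/** Idealized wall-clock estimate for a suite split evenly across browser containers. */
final class ParallelRunEstimate {

    /** Assumes equal test durations and no container start-up overhead. */
    static long wallClockSeconds(int tests, int secondsPerTest, int containers) {
        if (containers < 1) {
            throw new IllegalArgumentException("containers must be at least 1");
        }
        // Number of tests the busiest container has to run, rounded up.
        long testsPerContainer = (tests + (long) containers - 1) / containers;
        return testsPerContainer * secondsPerTest;
    }

    static String format(long totalSeconds) {
        return String.format("%d h %d min %d s",
                totalSeconds / 3600, (totalSeconds % 3600) / 60, totalSeconds % 60);
    }

    public static void main(String[] args) {
        System.out.println(format(wallClockSeconds(1000, 10, 1)));  // prints "2 h 46 min 40 s"
        System.out.println(format(wallClockSeconds(1000, 10, 10))); // prints "0 h 16 min 40 s"
    }
}
```

In practice the measured runtime will be somewhat higher than this lower bound, because test durations vary and containers take time to start.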

Nevertheless, the number of parallel threads must be selected judiciously. If the load exceeds the server’s CPU or memory capabilities, performance degradation may occur. Empirical tuning is recommended to determine the optimal concurrency level, with continuous monitoring of resource usage during test execution. Tools such as Docker stats, Grafana, or other infrastructure-level profilers can be employed for this purpose.

Occasionally, specific browsers such as Firefox may exhibit reduced stability under high concurrency. In such cases, it is advisable to allocate fewer threads to these browsers or increase their memory quota. Chrome, in most scenarios, demonstrates greater resilience to parallel execution; however, stability issues cannot be fully ruled out, and behavior should be evaluated in the context of the specific application under test.

In summary, parallel testing constitutes a powerful mechanism for accelerating the delivery of test results. When properly configured, the combined use of TestNG and Selenoid enables flexible control over the number of execution threads and the distribution of workloads across different browsers. This approach supports the development of scalable and high-performance automated testing frameworks aligned with the demands of modern software development lifecycles.

Digression: a few words about cultural aspects in autotests

Before proceeding to the final conclusions, it is appropriate to make a brief digression concerning the influence of cultural and regional factors on the practice of UI test automation. In different countries, students, researchers, and engineers may approach testing methodologies with varying degrees of formality and rigor. In some regions, a pragmatic attitude prevails, where writing minimal, rapid tests is acceptable as long as the functionality is verified. In other academic or industrial contexts, formalized and structured testing practices are emphasized. Regardless of such differences, Selenide and Selenoid remain universally applicable tools. Their focus on stability, expressiveness, and flexibility makes them suitable for usage by undergraduate and graduate students as well as by professionals in countries such as Russia, Germany, or Brazil.

Notably, cultural distinctions may also manifest in browser preferences. In China, for example, users may rely on browsers such as 360 Browser or QQBrowser; in South Korea, Naver Whale is commonly used; and in Japan, some organizations continue to utilize legacy versions of Internet Explorer. While Selenoid does not provide official support for all regional or proprietary browsers, it remains technically feasible to create custom Docker images for such environments. Although this process requires additional configuration and effort, it demonstrates the extensibility of the Selenoid infrastructure.

The purpose of highlighting these nuances is to emphasize that testing strategies should be aligned with the needs and preferences of actual end users. If a significant portion of the product's audience accesses the application through Safari or mobile Chrome, then corresponding test coverage should be prioritized. Conversely, for internal or corporate applications used exclusively with Chrome, test environments can be simplified accordingly. The goal is not to achieve exhaustive browser coverage, but to implement a rational allocation of resources based on the target audience.

This approach to test planning is not a question of abstract "best practices" but rather one of informed decision-making grounded in product-specific usage data. Both Selenide and Selenoid provide sufficient flexibility to support diverse configurations, but it is the responsibility of test engineers to ensure that test coverage aligns with user behavior and technological constraints.

There is ongoing debate among development teams regarding whether to prioritize depth of functional testing or breadth of browser support. There is no universal answer. However, the versatility of tools like Selenide and Selenoid allows teams to effectively support both strategies—comprehensive scenario validation and wide platform compatibility—depending on the project context.

For students engaged in academic coursework or thesis projects, the examples provided in this article can serve as a foundation. Many computer science departments actively encourage the demonstration of practical testing frameworks. Selenide, in particular, is accessible to beginners because of its simplified syntax and because it wraps Selenium WebDriver, which many educational programs already cover. As such, students are encouraged to experiment with these tools in their academic projects.

For researchers, UI test automation presents multiple directions for further investigation. Areas of interest may include the development of defect prediction heuristics, adaptive wait logic, and the integration of artificial intelligence techniques into automated testing frameworks. Thus, in addition to offering practical value, Selenide and Selenoid open up considerable potential for scientific inquiry and innovation.

Conclusion and recommendations

It is essential to recognize that automated testing is a means of increasing the efficiency and reliability of software development processes, not an end in itself. When properly planned and prioritized, automation contributes significantly to product quality, team productivity, and release stability. Key elements of successful automation include well-defined priorities, carefully structured test scenarios, and an informed approach to browser compatibility. These aspects collectively support the development of reliable and maintainable systems.

Selenide is widely appreciated for its concise and readable syntax, as well as its integrated mechanisms for element waiting and state validation. Selenoid, in turn, offers a lightweight and scalable approach to browser containerization, facilitating parallel execution and resource efficiency. Combined, these tools provide a dependable solution that is equally applicable in educational, research, and industrial contexts.

Java remains a leading language in enterprise environments due to its mature ecosystem, extensive tooling, and large professional community. Its compatibility with tools such as Selenide and Selenoid makes it an optimal choice for constructing scalable, cross-platform UI automation frameworks.

Readers who wish to deepen their understanding of the topics covered in this article are encouraged to explore the supplementary materials listed in the reference section. These include practical case studies, conference presentations, and technical articles that address advanced aspects of working with Selenide, Selenoid, and TestNG. Continued learning and exploration in this field can contribute not only to individual professional development but also to broader advancements in automated software testing methodologies.

References:

- Official Selenide documentation. URL: https://ru.selenide.org/

- Selenide GitHub repository. URL: https://github.com/selenide/selenide

- Aerokube Selenoid. URL: https://aerokube.com/selenoid/latest/

- Report on Selenoid at the SQA Days conference (video). URL: https://www.youtube.com/watch?v=MbkW9rGcEwU

- The article "Page Object in UI testing". URL: https://habr.com/ru/post/466009/

- TestNG documentation. URL: https://testng.org/doc/

- SafariDriver features. URL: https://developer.apple.com/documentation/webkit/testing_with_webdriver_in_safari

- Links on working with Docker and Selenium. URL: https://docs.docker.com/get-started/ , https://www.selenium.dev/documentation/grid/

- An example of Allure and Selenide integration. URL: https://docs.qameta.io/allure/#_selenide

- Cloud services for cross-browser testing. URL: https://saucelabs.com/ , https://www.browserstack.com/